Security

Introduction

With so much of our lives happening online these days - whether it’s staying in touch, following the news, buying, or even selling products online - web security has never been more important. Unfortunately, the more we rely on these online services, the more appealing they become to malicious actors. As we’ve seen time and time again, even a single weak spot in the systems we depend on can lead to disrupted services, stolen personal data, or worse. The past two years have been no exception, with a rise in Denial-of-Service (DoS) attacks, bad bots, and supply-chain attacks targeting the Web like never before.

In this chapter, we take a closer look at the current state of web security by analyzing the protections and security practices used by websites today. We explore key areas like Transport Layer Security (TLS), cookie protection mechanisms, and safeguards against third-party content inclusion. We’ll discuss how security measures like these help prevent attacks, as well as highlight misconfigurations that can undermine them. Additionally, we examine the prevalence of harmful cryptominers and the usage of security.txt.

We also investigate the factors driving security practices, analyzing whether elements like country, website category, or technology stack influence the security measures in place. By comparing this year’s findings with those from the 2022 Web Almanac, we highlight key changes and assess long-term trends. This allows us to provide a broader perspective on the evolution of web security practices and the progress made over the years.

Transport security

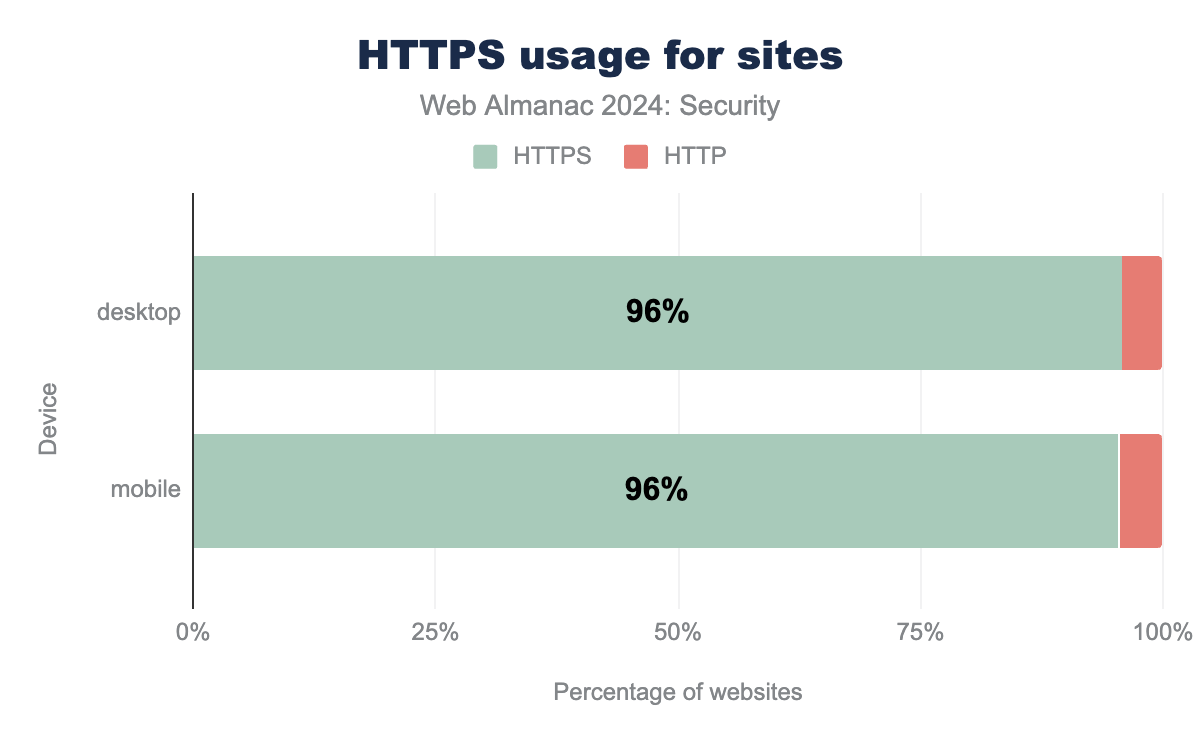

HTTPS uses Transport Layer Security (TLS) to secure the connection between client and server. Over the past years, the number of sites using TLS has increased tremendously. As in previous years, adoption of TLS continued to increase, but the increase is slowing down as it approaches 100%.

The number of requests served using TLS climbed another 4% to 98% on mobile since the last Almanac in 2022.

The number of homepages served over HTTPS on mobile increased from 89% to 95.6%. This percentage is lower than the number of requests served over HTTPS due to the high number of third-party resources websites load, which are more likely to be served over HTTPS.

Protocol versions

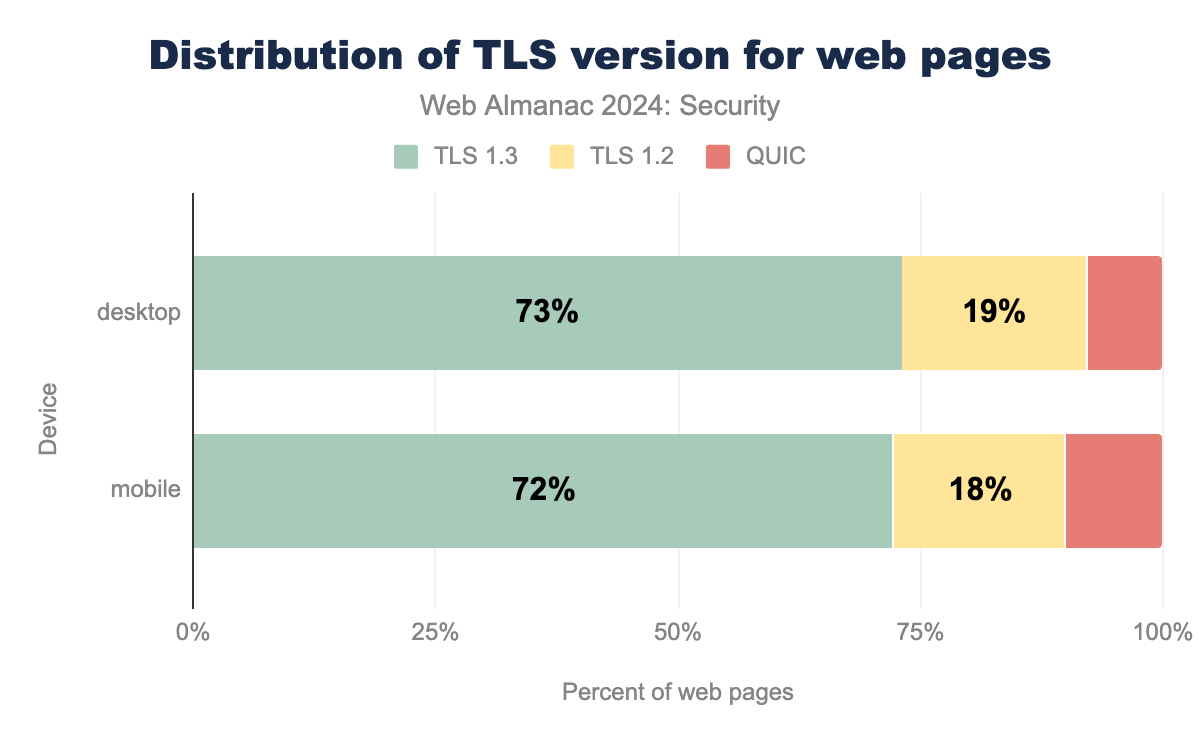

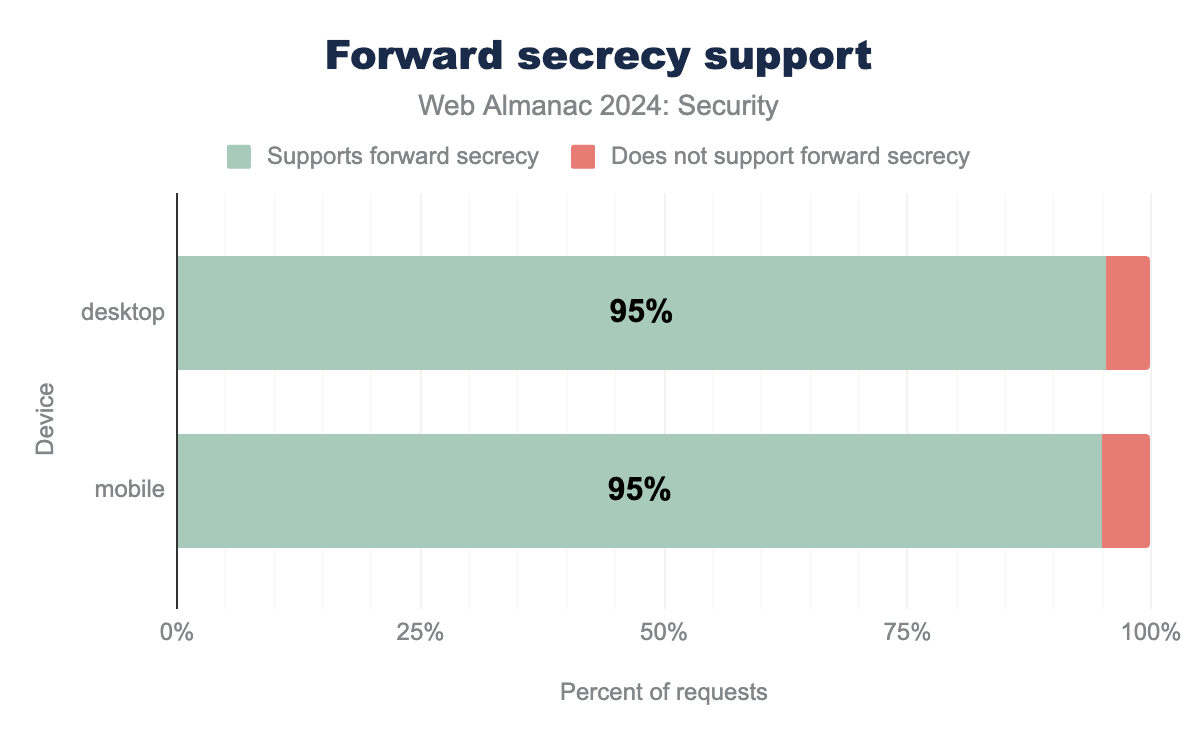

Over the years, multiple new versions of TLS have been created. To remain secure, it is important to use an up-to-date version of TLS. The latest version is TLS1.3, which has been the preferred version for a while. Compared to TLS1.2, version 1.3 removes several cryptographic algorithms still included in 1.2 that were found to have flaws, and it enforces perfect forward secrecy. Support for older versions of TLS has long been removed by major browser vendors. QUIC (Quick UDP Internet Connections), the protocol underlying HTTP/3, also uses TLS: it integrates the TLS1.3 handshake, providing security guarantees similar to those of TLS1.3.
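As an illustration of enforcing an up-to-date protocol version on the server side, here is a minimal sketch using Python's standard ssl module (the language choice is ours, not the Almanac's). It builds a context that refuses anything older than TLS1.2, with an option to accept TLS1.3 only; the certificate file names in the usage comment are hypothetical.

```python
import ssl


def make_server_context(tls13_only: bool = False) -> ssl.SSLContext:
    """Build a server-side TLS context that refuses legacy protocol versions."""
    # PROTOCOL_TLS_SERVER negotiates the highest version both peers support.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Refuse SSLv3, TLS1.0 and TLS1.1 handshakes outright.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if tls13_only:
        # TLS1.3 only offers forward-secret key exchanges and AEAD ciphers.
        ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


# Usage (hypothetical file names):
# ctx = make_server_context()
# ctx.load_cert_chain("fullchain.pem", "privkey.pem")
```

Raising the floor to TLS1.3 only is a judgment call, since clients limited to TLS1.2 would then fail to connect.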

We find that TLS1.3 is supported and used by 73% of web pages, while 19% use TLS1.2 and 8% use QUIC; on mobile, the figures are 72%, 18% and 10% respectively. The use of TLS1.3 has grown overall, even though QUIC has gained significant use compared to 2022, moving from 0% to almost 10% of mobile pages. The use of TLS1.2 continues to decrease as expected: compared to the last Almanac, it decreased by more than 12% for mobile pages, while TLS1.3 increased by a bit over 2%. We expect the adoption of QUIC to continue rising as the use of TLS1.2 continues to decline.

We assume that most websites did not move directly from TLS1.2 to QUIC. Rather, most sites now using QUIC likely migrated from TLS1.3, while others moved from TLS1.2 to TLS1.3, which gives the appearance of limited growth for TLS1.3.

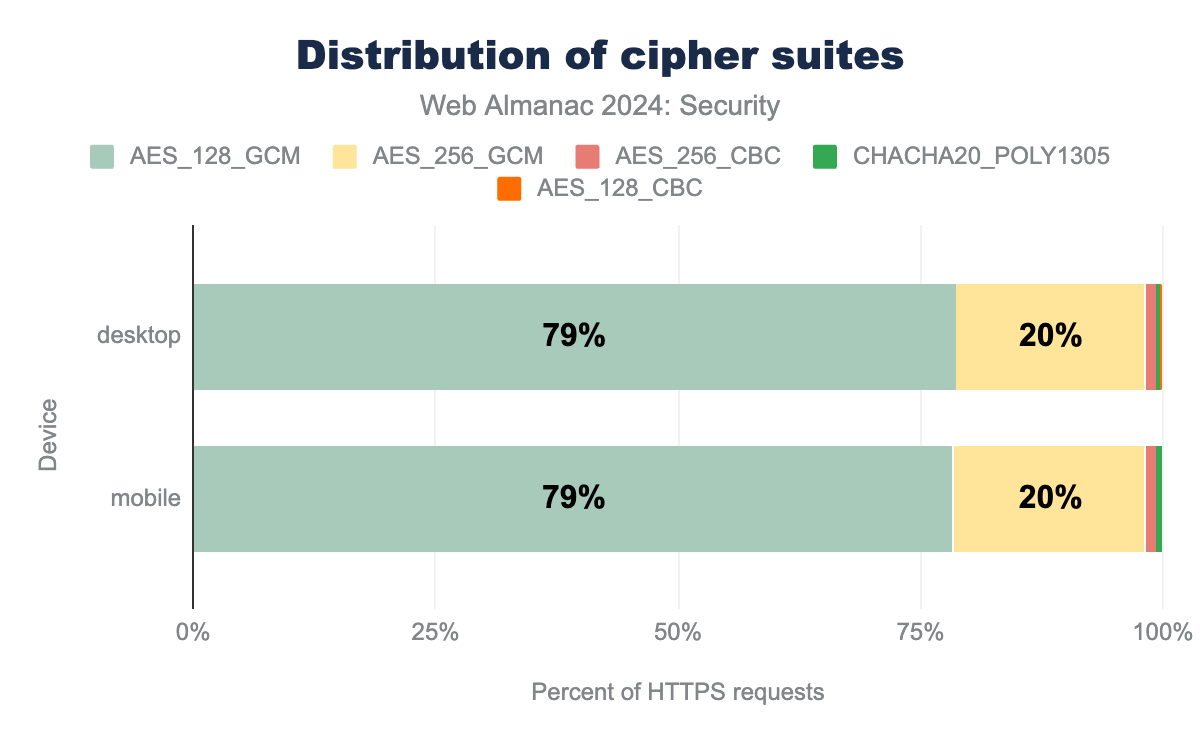

Cipher suites

Before client and server can communicate, they have to agree upon the cryptographic algorithms to use, known as cipher suites. Like last time, over 98% of requests are served using a Galois/Counter Mode (GCM) cipher, which is considered the most secure option because, unlike CBC-mode ciphers, it is not vulnerable to padding oracle attacks. Also unchanged is the share of requests using a 128-bit key, at almost 79%; this is still considered a secure key length for AES in GCM mode. Only a handful of suites are used on the visited pages. TLS1.3 only supports authenticated encryption (AEAD) ciphers such as AES-GCM and ChaCha20-Poly1305, which also simplifies its cipher suite ordering.

| Cipher suite | Desktop | Mobile |
|---|---|---|
| AES_128_GCM | 79% | 79% |
| AES_256_GCM | 20% | 20% |
| AES_256_CBC | 1% | 1% |
| CHACHA20_POLY1305 | 0% | 0% |
| AES_128_CBC | 0% | 0% |

TLS1.3 makes forward secrecy mandatory, which is why it is so widely supported on the web. Forward secrecy ensures that if a long-term key is leaked, it cannot be used to decrypt messages previously sent over a connection, because each session's keys are derived from short-lived (ephemeral) key exchanges. This is important to ensure that adversaries who store traffic over the long term cannot decrypt entire conversations as soon as they manage to obtain a key. Interestingly, the use of forward secrecy dropped by almost 2% this year, to 95%.
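To make the idea of ephemeral keys concrete, here is a deliberately simplified sketch of an ephemeral Diffie-Hellman exchange in Python. It is a toy: the group (the Mersenne prime 2^127 - 1 with base 3) is far too weak for real use, and it omits the signature with which a TLS server authenticates its ephemeral share using its long-term key. It only illustrates why a recorded transcript is not enough to recover a session key once the per-session private values are discarded.

```python
import hashlib
import secrets
from typing import Tuple

# Toy parameters: the Mersenne prime 2**127 - 1 with base 3.
# NOT secure; real TLS uses vetted groups such as X25519 or ffdhe2048.
P = 2**127 - 1
G = 3


def ephemeral_keypair() -> Tuple[int, int]:
    """Create a fresh (private, public) pair that lives for one session only."""
    private = secrets.randbelow(P - 2) + 1
    return private, pow(G, private, P)


def derive_key(own_private: int, peer_public: int) -> bytes:
    """Hash the shared Diffie-Hellman secret into a 256-bit session key."""
    shared = pow(peer_public, own_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


def handshake() -> Tuple[bytes, bytes]:
    """Run one toy exchange and return the key each side derived."""
    client_private, client_public = ephemeral_keypair()
    server_private, server_public = ephemeral_keypair()
    client_key = derive_key(client_private, server_public)
    server_key = derive_key(server_private, client_public)
    # Only the public values cross the wire. Once both private values are
    # discarded at the end of this function, a recorded transcript no longer
    # suffices to recompute the key, even if a long-term key leaks later.
    return client_key, server_key
```

Each call to handshake() yields a different key, so compromising one session reveals nothing about the others.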

Certificate Authorities

In order to use TLS, a server must first obtain a certificate issued by a Certificate Authority (CA). If the certificate is issued by one of the CAs that browsers trust, the browser will recognize it, allowing the user to establish a secure TLS connection with the server.

| Issuer | Desktop | Mobile |
|---|---|---|
| R3 | 44.3% | 45.1% |
| GTS CA 1P5 | 6.1% | 6.6% |
| E1 | 4.2% | 4.3% |
| Sectigo RSA Domain Validation Secure Server CA | 3.3% | 3.1% |
| R10 | 2.6% | 2.8% |
| R11 | 2.6% | 2.8% |
| Go Daddy Secure Certificate Authority - G2 | 2.0% | 1.7% |
| cPanel, Inc. Certification Authority | 1.7% | 1.8% |
| Cloudflare Inc ECC CA-3 | 1.5% | 1.3% |
| Amazon RSA 2048 M02 | 1.4% | 1.3% |

R3 (an intermediate certificate from Let’s Encrypt) still leads the charts, although its usage dropped compared to last year. The E1, R10 and R11 intermediate certificates, whose share of websites is rising, are also from Let’s Encrypt.

R3 and E1 were issued in 2020 and are only valid for 5 years, which means they will expire in September 2025. Around a year before its intermediate certificates expire, Let’s Encrypt issues new intermediates that gradually take over from the older ones. This March, Let’s Encrypt issued new intermediates, including R10 and R11, which are only valid for 3 years. These latter two certificates will take over from R3 directly, which should be reflected in next year’s Almanac.

Along with the rise in the number of Let’s Encrypt-issued certificates, the other current top 10 providers have seen a decrease in their share of certificates issued, except for GTS CA 1P5, which rose from close to 0% to over 6.5% on mobile. Of course, a CA may have been in the process of switching intermediate certificates at the time of our analysis, which would mean it serves a larger percentage of sites than reflected here.

When we sum together the use of all certificates of Let’s Encrypt, we find that they issue over 56% of the certificates currently in use.
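A per-issuer breakdown like the one above can be approximated with Python's standard library. The sketch below is our own illustration, not the Almanac's methodology: fetch_peer_cert opens a validated TLS connection and returns the parsed certificate from getpeercert(), and share_by_organization groups certificates by the issuer's organization name, so that all Let’s Encrypt intermediates (R3, E1, R10, R11) are summed together. The helper names are hypothetical.

```python
import socket
import ssl
from collections import Counter
from typing import Dict, List, Optional


def issuer_field(cert: dict, field: str) -> Optional[str]:
    """Read one attribute (e.g. 'commonName') from a getpeercert() issuer tuple."""
    for rdn in cert.get("issuer", ()):
        for key, value in rdn:
            if key == field:
                return value
    return None


def fetch_peer_cert(host: str, port: int = 443) -> dict:
    """Open a validated TLS connection and return the server's parsed certificate."""
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=5) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def share_by_organization(certs: List[dict]) -> Dict[str, float]:
    """Percentage of certificates per issuing organization, summing all intermediates."""
    counts = Counter(issuer_field(c, "organizationName") or "unknown" for c in certs)
    total = sum(counts.values())
    return {org: 100 * n / total for org, n in counts.items()}


# Usage (requires network access; host names are placeholders):
# certs = [fetch_peer_cert(h) for h in ["example.com", "example.org"]]
# print(share_by_organization(certs))
```

Grouping by organization rather than by intermediate common name is what turns several intermediate entries into a single provider share.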

HTTP Strict Transport Security

HTTP Strict Transport Security (HSTS) is a response header that a server can use to communicate to the browser that only HTTPS should be used to reach pages hosted on this domain, instead of first reaching out over HTTP and following a redirect.

Currently, 30% of responses on mobile have an HSTS header, a 5% increase compared to 2022. Users of the header communicate directives to the browser by adding them to the header value. The max-age directive is required: it tells the browser how long, in seconds, it should continue to visit the site only over HTTPS.

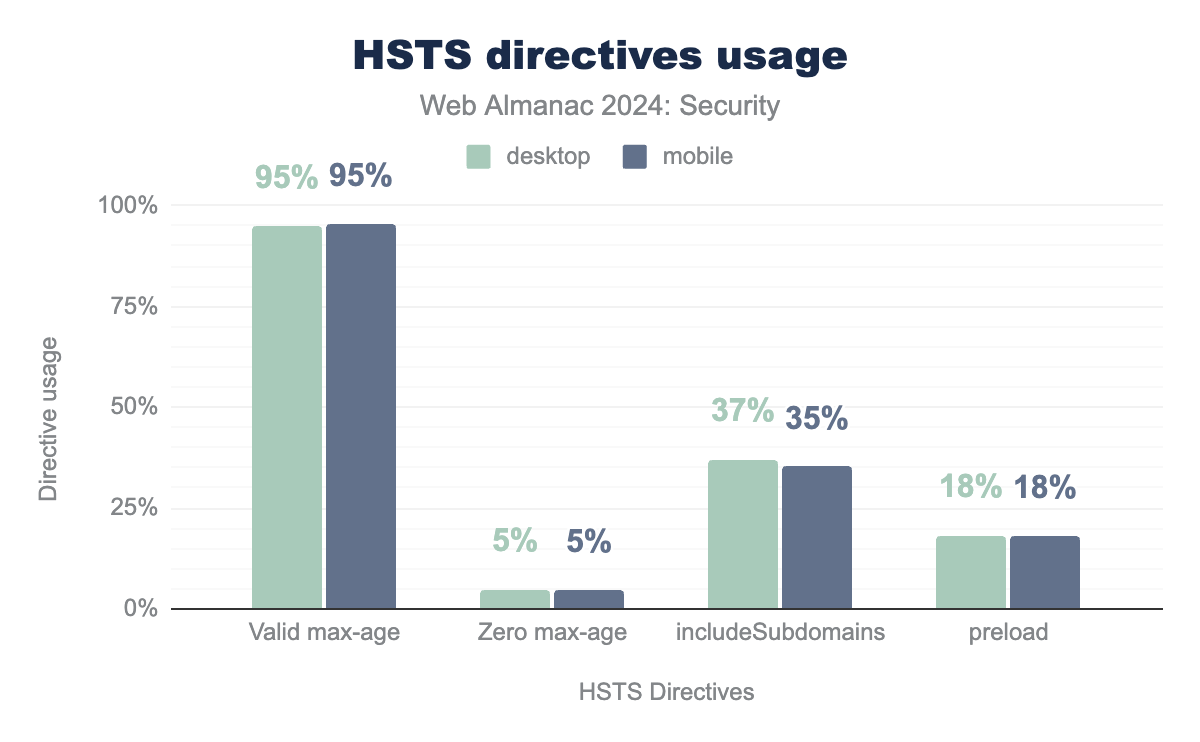

| HSTS directive | Desktop | Mobile |
|---|---|---|
| Zero max-age | 5% | 5% |
| Valid max-age | 95% | 95% |
| includeSubdomains | 37% | 35% |
| preload | 18% | 18% |

The share of requests with a valid max-age has remained unchanged at 95%. The other, optional, directives (includeSubdomains and preload) both saw a slight increase of 1% compared to 2022, to 35% and 18% on mobile respectively. The preload directive, which is not part of the HSTS specification, requires includeSubdomains to be set and a max-age of at least 1 year (31,536,000 seconds).
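The directive rules described above lend themselves to a small checker. This Python sketch (the helper names are hypothetical) parses a Strict-Transport-Security value and tests only the header-level preload requirements: a max-age of at least 31,536,000 seconds plus includeSubdomains and preload. Directive names are matched case-insensitively; other preload-list conditions, such as redirecting HTTP to HTTPS, are out of scope. A max-age of 0 instructs the browser to stop treating the host as HSTS-only.

```python
from typing import Dict, Optional

ONE_YEAR = 31_536_000  # seconds; minimum max-age for the HSTS preload list


def parse_hsts(header: str) -> Dict[str, Optional[str]]:
    """Split a Strict-Transport-Security value into lower-cased directive names."""
    directives: Dict[str, Optional[str]] = {}
    for part in header.split(";"):
        part = part.strip()
        if not part:
            continue
        name, _, value = part.partition("=")
        # Valueless directives such as preload map to None.
        directives[name.strip().lower()] = value.strip().strip('"') or None
    return directives


def preload_ready(header: str) -> bool:
    """True if the header alone satisfies the preload requirements described above."""
    directives = parse_hsts(header)
    try:
        max_age = int(directives.get("max-age") or "")
    except ValueError:
        return False  # max-age is required; missing or malformed means invalid
    return (
        max_age >= ONE_YEAR
        and "includesubdomains" in directives
        and "preload" in directives
    )
```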

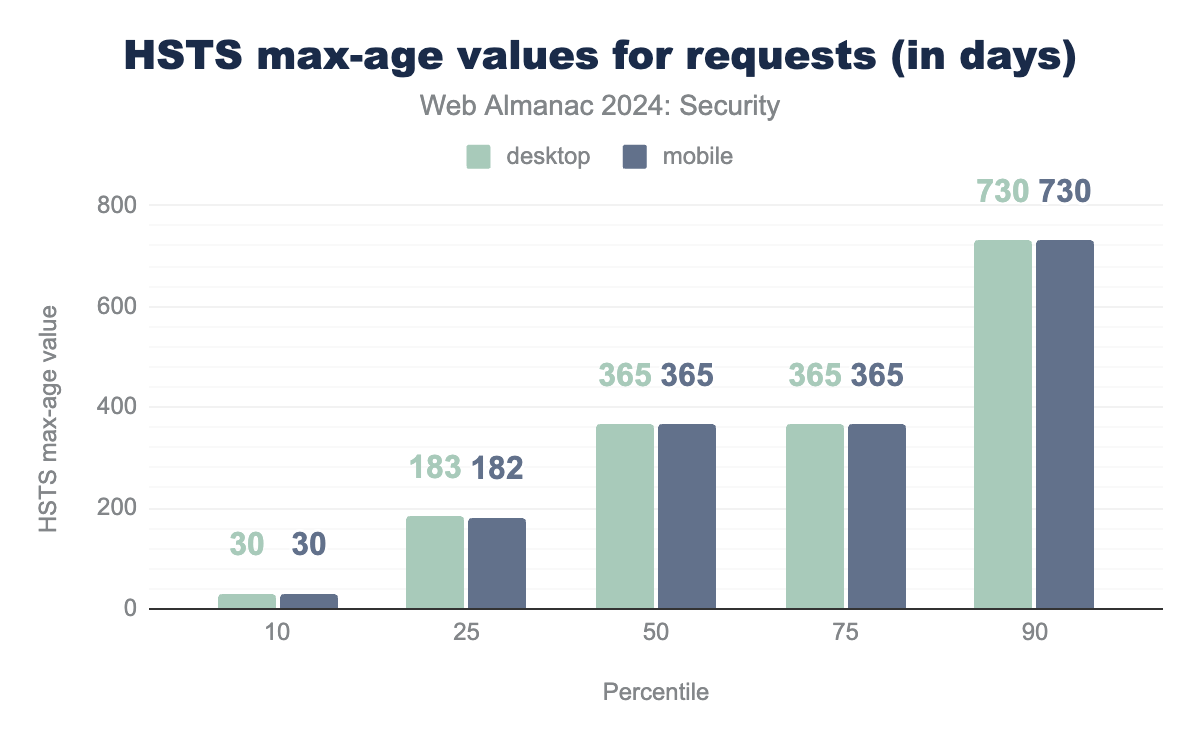

The distribution of valid max-age values has remained almost the same as in 2022, with the exception that the 10th percentile on mobile has decreased from 72 to 30 days. The median value of max-age remains at 1 year.

Cookies

Websites can store small pieces of data in a user’s browser by setting an HTTP cookie. Depending on the cookie’s attributes, it will be sent with every subsequent request to that website. As such, cookies can be used for purposes of implicit authentication, tracking or storing user preferences.

When cookies are used for authenticating users, it is paramount to protect them from abuse. For instance, if an adversary gets hold of a user’s session cookie, they could potentially log into the victim’s account.

To protect their users from attacks like Cross-Site Request Forgery (CSRF), session hijacking, Cross-Site Script Inclusion (XSSI) and Cross-Site Leaks, websites are expected to configure authentication cookies securely.

Cookie attributes

The three cookie attributes outlined below enhance the security of authentication cookies against the attacks mentioned earlier. Ideally, developers should consider using all attributes, as they provide complementary layers of protection.

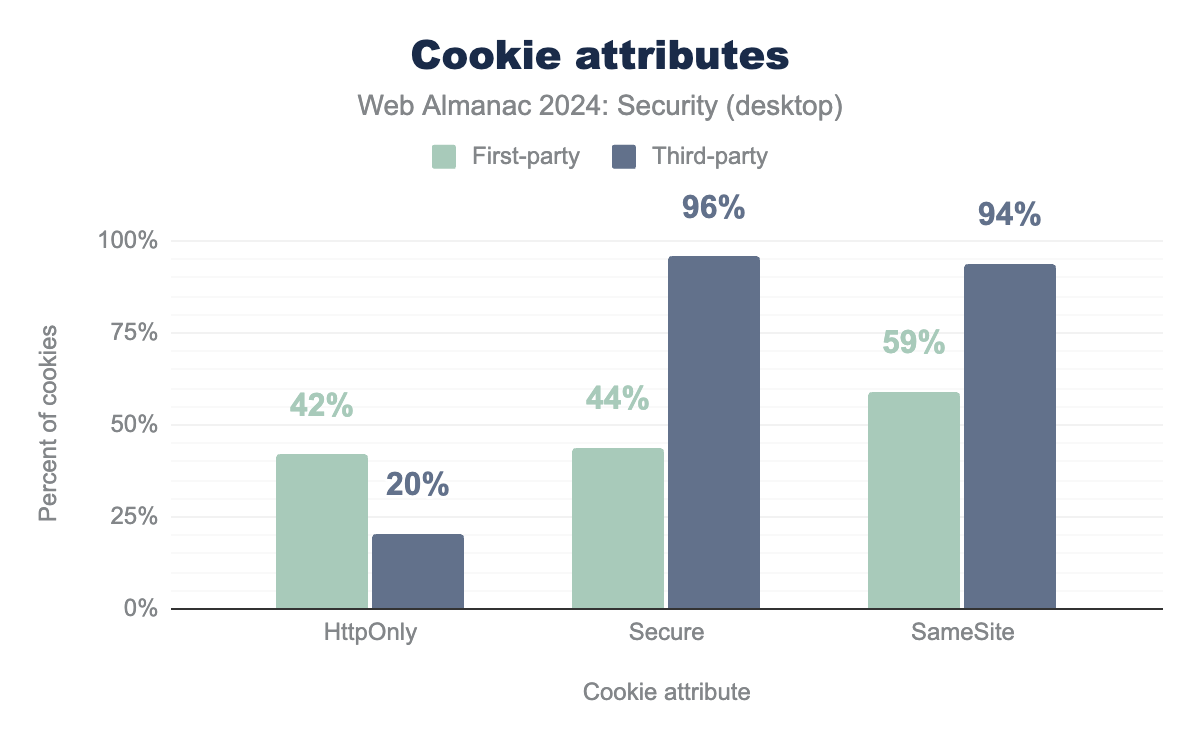

In a first-party context, HttpOnly is used by 42% of cookies, Secure by 44%, and SameSite by 59%, while in a third-party context, HttpOnly is used by 20%, Secure by 96%, and SameSite by 94%.

HttpOnly

When this attribute is set, the cookie cannot be accessed or manipulated through the JavaScript document.cookie API. This prevents a Cross-Site Scripting (XSS) attack from gaining access to cookies containing secret session tokens.

With 42% of cookies having the HttpOnly attribute in a first-party context on desktop, the usage has risen by 6% compared to 2022. As for third-party requests, the usage has decreased by 1%.

Secure

Browsers only transmit cookies with the Secure attribute over secure, encrypted channels, such as HTTPS, and not over HTTP. This ensures that man-in-the-middle attackers cannot intercept and read sensitive values stored in cookies.

The use of the Secure attribute has been steadily increasing over the years. Since 2022, an additional 7% of cookies in first-party contexts and 6% in third-party contexts have been configured with this attribute. As discussed in previous editions of the Security chapter, the significant difference in adoption between the two contexts is largely due to the requirement that third-party cookies with SameSite=None must also be marked as Secure. This highlights that additional security prerequisites for enabling desired non-default functionality are an effective driver for the adoption of security features.

SameSite

The most recently introduced cookie attribute, SameSite, allows developers to control whether a cookie is allowed to be included in third-party requests. It is intended as an additional layer of defense against attacks like CSRF.

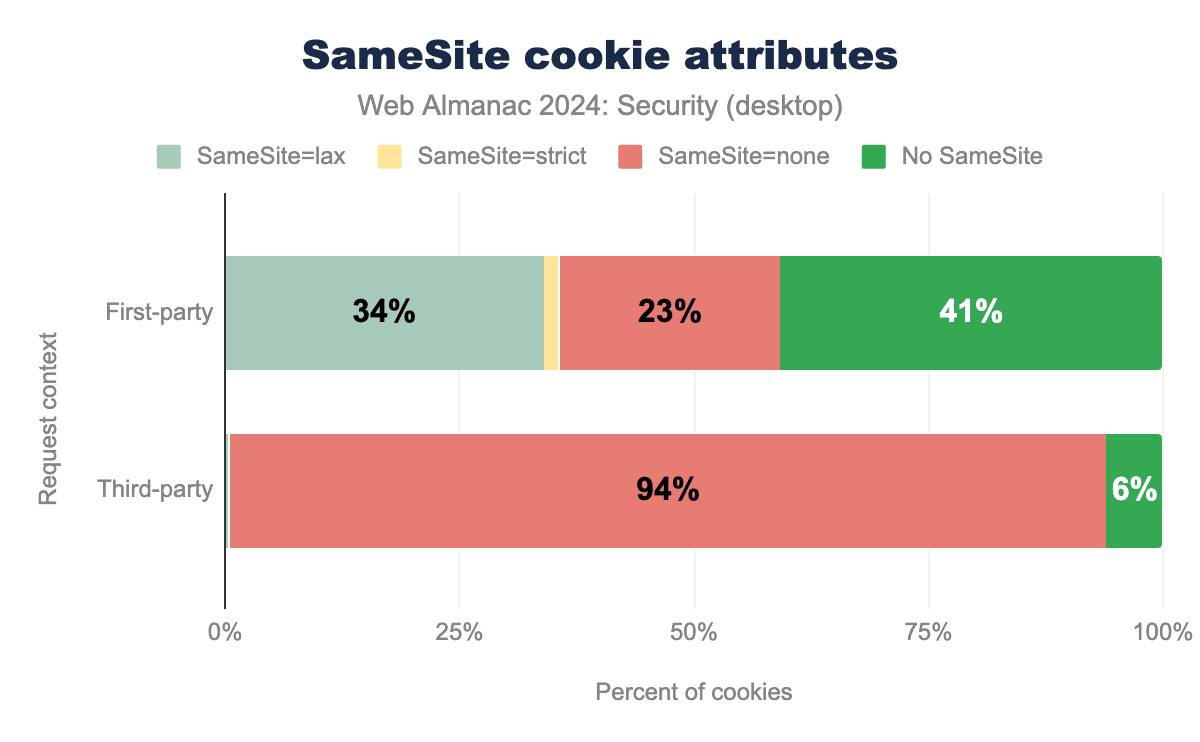

The attribute can be set to one of three values: Strict, Lax, or None. Cookies with the Strict value are completely excluded from cross-site requests. When set to Lax, cookies are only included in third-party requests under specific conditions, such as navigational GET requests, but not POST requests. By setting SameSite=None, the cookie bypasses the same-site policy and is included in all requests, making it accessible in cross-site contexts.

For first-party cookies, SameSite=Lax is used by 34%, SameSite=Strict by 2%, and SameSite=None by 23%, while 41% have no SameSite attribute. For third-party cookies, SameSite=Lax is used by 0.4% and SameSite=None by 94%, while 6% have no SameSite attribute.

While the share of cookies with a SameSite attribute has increased compared to 2022, this rise is largely attributable to cookies being explicitly excluded from the same-site policy by setting SameSite=None.

It’s important to note that Chromium-based browsers treat cookies without a SameSite attribute as SameSite=Lax by default. Consequently, in these browsers, a total of 75% of cookies set in a first-party context are effectively treated as if they were set to Lax.
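Setting all three attributes is straightforward in most server frameworks. As one example, Python's standard http.cookies module (SameSite support requires Python 3.8 or later) can emit such a header; the cookie name session and the one-hour lifetime are illustrative assumptions. SameSite is set explicitly to Lax rather than relying on the browser's default behavior.

```python
from http.cookies import SimpleCookie


def session_cookie(token: str, max_age: int = 3600) -> str:
    """Return a Set-Cookie value for an authentication cookie (name is illustrative)."""
    jar = SimpleCookie()
    jar["session"] = token
    morsel = jar["session"]
    morsel["httponly"] = True   # not readable through document.cookie
    morsel["secure"] = True     # only sent over encrypted connections
    morsel["samesite"] = "Lax"  # explicit, instead of relying on browser defaults
    morsel["path"] = "/"
    morsel["max-age"] = max_age
    return morsel.OutputString()


# A web framework would send this as: Set-Cookie: <returned value>
```

Strict would be the tighter choice for cookies that never need to accompany cross-site navigations.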

Prefixes

Session fixation attacks can be mitigated by using cookie prefixes like __Secure- and __Host-. When a cookie name starts with __Secure-, the browser requires the cookie to have the Secure attribute and to be transmitted over an encrypted connection. For cookies with the __Host- prefix, the browser additionally mandates that the cookie includes the Path attribute set to / and excludes the Domain attribute. These requirements help protect cookies from man-in-the-middle attacks and threats from compromised subdomains.

| Type of cookie | __Secure- | __Host- |
|---|---|---|
| First-party | 0.05% | 0.17% |
| Third-party | 0.00% | 0.04% |

__Secure- and __Host- prefixes (desktop).

The adoption of cookie prefixes remains low, with less than 1% of cookies using these prefixes on both desktop and mobile platforms. This is particularly surprising given the high adoption rate of cookies with the Secure attribute, the only prerequisite for cookies prefixed with __Secure-. However, changing a cookie’s name can require significant refactoring, which is presumably a reason why developers tend to avoid this.
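The prefix rules described in this section can be expressed as a short check. The following Python function is a simplified approximation of what a browser enforces when it receives a prefixed cookie, not a complete implementation of the cookie specification; attributes are passed as a hypothetical dictionary keyed by lower-cased attribute names.

```python
from typing import Mapping, Optional


def prefix_allows(name: str, attrs: Mapping[str, Optional[str]], secure_origin: bool) -> bool:
    """Simplified check of the __Secure- and __Host- cookie name prefixes.

    attrs maps lower-cased attribute names ("secure", "path", "domain", ...)
    to their values; flag attributes such as Secure map to None.
    """
    if name.startswith(("__Secure-", "__Host-")):
        # Both prefixes require the Secure attribute and an encrypted connection.
        if "secure" not in attrs or not secure_origin:
            return False
    if name.startswith("__Host-"):
        # __Host- also requires Path=/ and forbids Domain, binding the cookie to one host.
        if attrs.get("path") != "/" or "domain" in attrs:
            return False
    return True
```

A browser applying these rules refuses to store a prefixed cookie that fails them, which is what keeps a compromised subdomain from planting a __Host- cookie for its parent domain.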

Cookie age

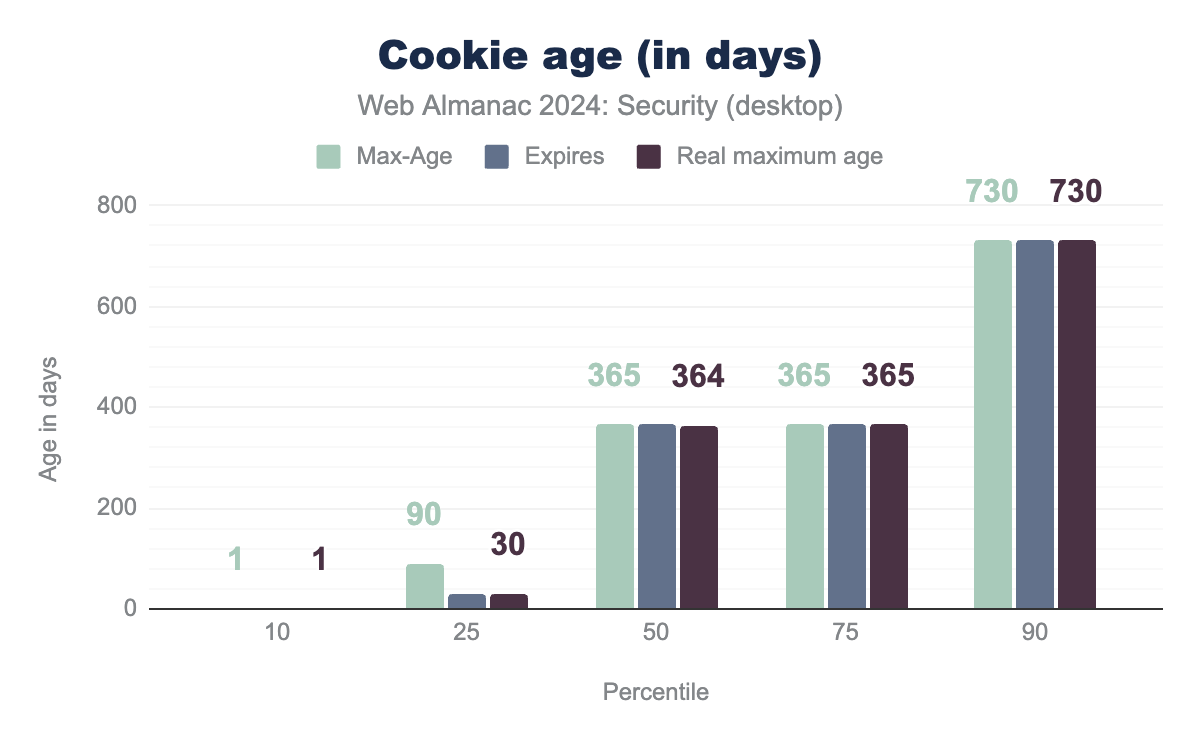

Websites can control how long browsers store a cookie by setting its lifespan. Browsers will discard cookies when they reach the age specified by the Max-Age attribute or when the timestamp defined in the Expires attribute is reached. If neither attribute is set, the cookie is considered a session cookie and will be removed when the session ends.

At the lowest percentile shown, Max-Age, Expires and the real age are all 1 day. At the 25th percentile, Max-Age is 90 days and Expires is 60 days, with a real age of 60 days. At the 50th percentile, Max-Age and Expires are 365 days and the real age is 364 days. At the 75th percentile, all three are 365 days, and at the 90th percentile, all three are 730 days.

The distribution of cookie ages has remained largely unchanged compared to last year. However, since then, the cookie standard working draft has been updated to cap the maximum cookie age at 400 days. This change has already been implemented in Chrome and Safari. Based on the percentiles shown above, more than 10% of all observed cookies have their age capped to this 400-day limit in these browsers.
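The effect of the 400-day cap can be sketched as follows. This Python helper (a hypothetical name) assumes the capping behavior described above for browsers that implement it, together with the standard rule that Max-Age takes precedence over Expires when both are present; it returns None for session cookies.

```python
from datetime import datetime, timedelta
from typing import Optional

MAX_LIFETIME = timedelta(days=400)  # cap from the updated cookie draft


def effective_expiry(
    now: datetime,
    max_age: Optional[int] = None,
    expires: Optional[datetime] = None,
) -> Optional[datetime]:
    """When a browser enforcing the 400-day cap would discard the cookie.

    Returns None for a session cookie (neither Max-Age nor Expires set).
    """
    if max_age is not None:
        # Max-Age wins over Expires; zero or negative values expire immediately.
        requested = now + timedelta(seconds=max(max_age, 0))
    elif expires is not None:
        requested = expires
    else:
        return None
    return min(requested, now + MAX_LIFETIME)
```

Under this model, a cookie requesting 730 days (the 90th percentile above) ends up living 400 days, while a 365-day cookie is unaffected.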

Content inclusion

Content inclusion is a foundational aspect of the Web, allowing resources like CSS, JavaScript, fonts, and images to be shared via CDNs or reused across multiple websites. However, fetching content from external or third-party sources introduces significant risks. By referencing resources outside your control, you are placing trust in those third parties, which could either turn malicious or be compromised. This can lead to so-called supply-chain attacks, like the recent polyfill incident where compromised resources affected hundreds of thousands of websites. Therefore, security policies that govern content inclusion are essential for protecting web applications.

Content Security Policy

Websites can exert greater control over their embedded content by deploying a Content Security Policy (CSP), either through the Content-Security-Policy response header or by defining the policy in a <meta> tag. The wide range of directives available in CSP allows websites to specify, in a fine-grained manner, which resources can be fetched and from which origins.

In addition to vetting included content, CSP can serve other purposes as well, such as enforcing the use of encrypted channels with the upgrade-insecure-requests directive and controlling where the site can be embedded to protect against clickjacking attacks using the frame-ancestors directive.
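A CSP header value is a semicolon-separated list of directives, each followed by its sources. The minimal Python sketch below (build_csp and the example origin are hypothetical) serializes a mapping into that form and rejects typographic quotes, since keywords such as 'self' and 'none' must use plain ASCII single quotes. Note that frame-ancestors only takes effect when delivered in the response header; browsers ignore it in a <meta> policy.

```python
from typing import Dict, List


def build_csp(directives: Dict[str, List[str]]) -> str:
    """Serialize {directive: [sources]} into a Content-Security-Policy value."""
    parts = []
    for name, sources in directives.items():
        for source in sources:
            # Curly quotes (often introduced by copy-pasting from documents)
            # turn keywords like 'none' into unrecognized source expressions.
            if "\u2018" in source or "\u2019" in source:
                raise ValueError(f"use ASCII quotes in {source!r}")
        # A directive without sources (e.g. upgrade-insecure-requests) is just its name.
        parts.append(" ".join([name, *sources]))
    return "; ".join(parts)


policy = build_csp({
    "upgrade-insecure-requests": [],
    "frame-ancestors": ["'self'"],
    "img-src": ["'self'", "https://images.example"],  # hypothetical origin
})
# policy == "upgrade-insecure-requests; frame-ancestors 'self'; img-src 'self' https://images.example"
```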

The adoption rate of CSP headers increased from 15% of all hosts in 2022 to 19% this year. This amounts to a relative increase of 27%. Over these two years, the relative increase was 12% between 2022 and 2023, and 14% between 2023 and 2024.

Looking back, overall CSP adoption was only at 12% of hosts in 2021, so it’s encouraging to see that growth has remained steady. If this trend continues, projections suggest that CSP adoption will surpass the 20% mark in next year’s Web Almanac.

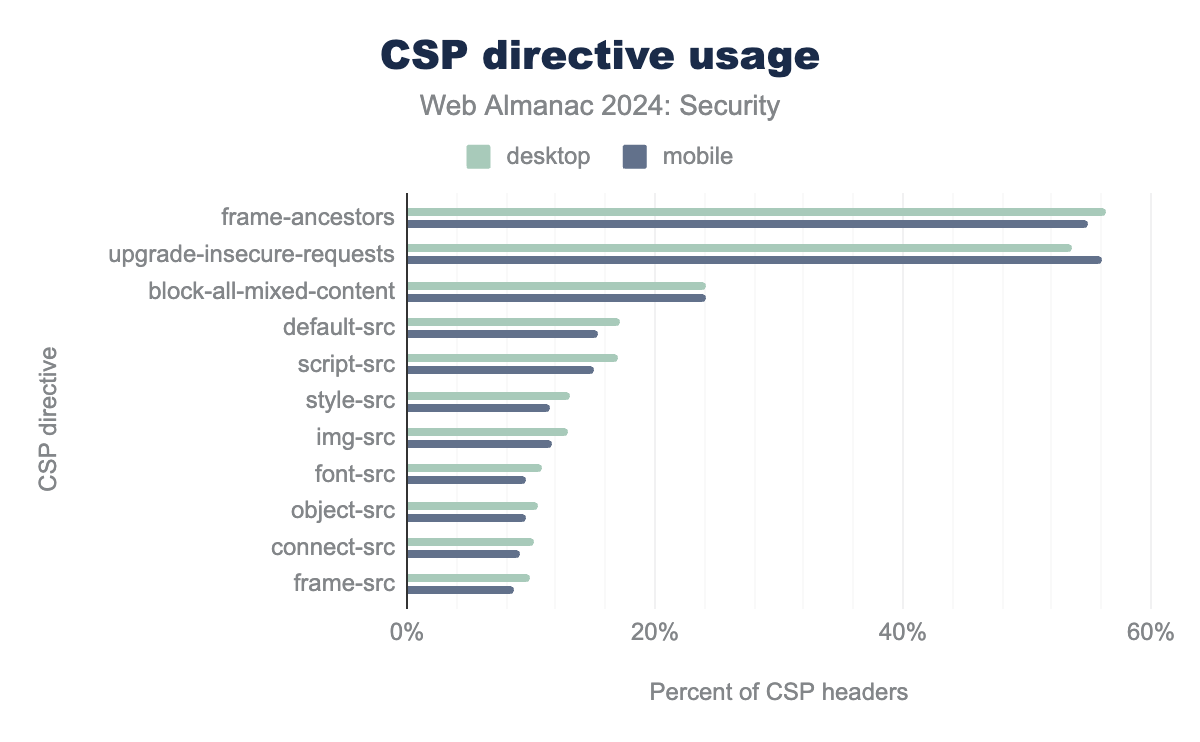

Directives

Most websites utilize CSP for purposes beyond controlling embedded resources, with the upgrade-insecure-requests and frame-ancestors directives being the most popular.

| Directive | Desktop | Mobile |
|---|---|---|
| frame-ancestors | 56% | 55% |
| upgrade-insecure-requests | 54% | 56% |
| block-all-mixed-content | 24% | 24% |
| default-src | 17% | 15% |
| script-src | 17% | 15% |
| style-src | 13% | 12% |
| img-src | 13% | 12% |
| font-src | 11% | 10% |
| connect-src | 10% | 9% |
| frame-src | 10% | 9% |

The block-all-mixed-content directive, which has been deprecated in favor of upgrade-insecure-requests, is the third most used directive. Although we observed a relative decrease in its usage of 12.5% for desktop and 13.8% for mobile between 2020 and 2021, the decline has slowed since 2022 to an average yearly decrease of 4.4% for desktop and 6.4% for mobile.

| Policy | Desktop | Mobile |
|---|---|---|
| upgrade-insecure-requests; | 27% | 30% |
| block-all-mixed-content; frame-ancestors 'none'; upgrade-insecure-requests; | 22% | 22% |
| frame-ancestors 'self'; | 11% | 10% |

The top three directives also make up the building blocks of the most prevalent CSP definitions. The second most commonly used CSP definition includes both block-all-mixed-content and upgrade-insecure-requests. This suggests that many websites use block-all-mixed-content for backward compatibility, as newer browsers will ignore this directive if upgrade-insecure-requests is present.

| Directive | Desktop (relative change since 2022) | Mobile (relative change since 2022) |
|---|---|---|
| upgrade-insecure-requests | -1% | 0% |
| frame-ancestors | 5% | 3% |
| block-all-mixed-content | -9% | -13% |
| default-src | -9% | -6% |
| script-src | -3% | -2% |
| style-src | -8% | -2% |
| img-src | -3% | 9% |
| font-src | -4% | 8% |
| connect-src | 3% | 17% |
| frame-src | 4% | 16% |
| object-src | 16% | 17% |

All other directives shown in the table above are used for content inclusion control. Overall, usage has remained relatively stable. However, a notable change is the increased use of the object-src directive, which has surpassed connect-src and frame-src. Since 2022, the usage of object-src has risen by 15.9% for desktop and 16.8% for mobile.

Among the most notable decreases in usage is default-src, the catch-all directive. This decline could be explained by the increasing use of CSP for purposes beyond content inclusion, such as enforcing upgrades from HTTP to HTTPS or controlling the embedding of the current page. In these situations default-src is not applicable, as the relevant directives don’t fall back to it. This shift in the purpose of CSP is confirmed by the most prevalent CSP headers listed in Figure 17, all of which have seen an increase in usage since 2022. However, directives like upgrade-insecure-requests and block-all-mixed-content, while part of these most common CSP headers, are being used less overall, as seen in Figure 18.

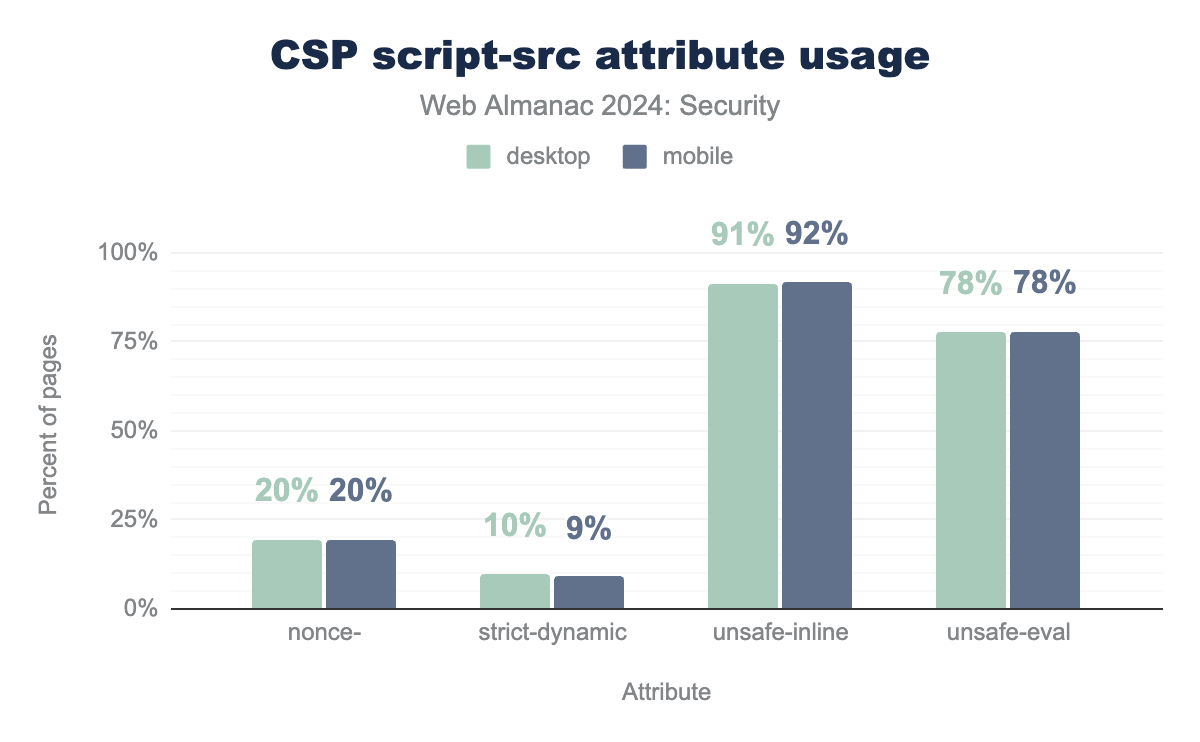

Keywords for script-src

One of the most important directives of CSP is script-src, as restricting which scripts a website can load greatly hinders potential adversaries. This directive can be used with several source keywords.

| Keyword | Desktop | Mobile |
|---|---|---|
| nonce- | 20% | 20% |
| strict-dynamic | 10% | 9% |
| unsafe-inline | 91% | 92% |
| unsafe-eval | 78% | 78% |

Usage of nonce-, strict-dynamic, unsafe-inline and unsafe-eval in the CSP script-src directive, as a share of websites with a CSP.

The unsafe-inline and unsafe-eval keywords can significantly reduce the security benefits provided by CSP. The unsafe-inline keyword permits the execution of inline scripts, while unsafe-eval allows the use of the JavaScript eval function. Unfortunately, these insecure practices remain widespread, illustrating how difficult it is for websites to avoid inline scripts and the eval function.

| Keyword | Desktop (relative change) | Mobile (relative change) |
|---|---|---|
| nonce- | 62% | 39% |
| strict-dynamic | 61% | 88% |
| unsafe-inline | -3% | -3% |
| unsafe-eval | -3% | 0% |

Relative change in the usage of script-src keywords.

However, the increasing adoption of the nonce- and strict-dynamic keywords is a positive development. By using the nonce- keyword, a secret nonce can be defined, allowing only inline scripts with the correct nonce to execute. This approach is a secure alternative to the unsafe-inline directive for permitting inline scripts. When used in combination with the strict-dynamic keyword, nonced scripts are permitted to import additional scripts from any origin. This approach simplifies secure script loading for developers, as it allows them to trust a single nonced script, which can then securely load other necessary resources.
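The nonce-based approach can be sketched in Python as follows. The nonce must be unpredictable and regenerated for every response; otherwise an attacker who learns it could inject a script carrying it. The helper names and script path are hypothetical, and the extra object-src 'none' and base-uri 'none' directives follow commonly recommended strict-CSP setups rather than anything measured in this chapter.

```python
import secrets
from typing import Tuple


def nonce_policy() -> Tuple[str, str]:
    """Return (nonce, CSP value) for a single response."""
    nonce = secrets.token_urlsafe(16)  # 16 random bytes, base64url-encoded
    policy = (
        f"script-src 'nonce-{nonce}' 'strict-dynamic'; "
        "object-src 'none'; base-uri 'none'"
    )
    return nonce, policy


def script_tag(src: str, nonce: str) -> str:
    """Render a <script> element that the policy above allows to run."""
    return f'<script src="{src}" nonce="{nonce}"></script>'


nonce, policy = nonce_policy()
# Send `policy` as the Content-Security-Policy header and embed the tag:
html = script_tag("/static/app.js", nonce)  # hypothetical path
```

When 'strict-dynamic' is present, supporting browsers ignore host allowlists and 'unsafe-inline' in script-src, so such values can be kept as a fallback for older browsers without weakening the policy in newer ones.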

Allowed hosts

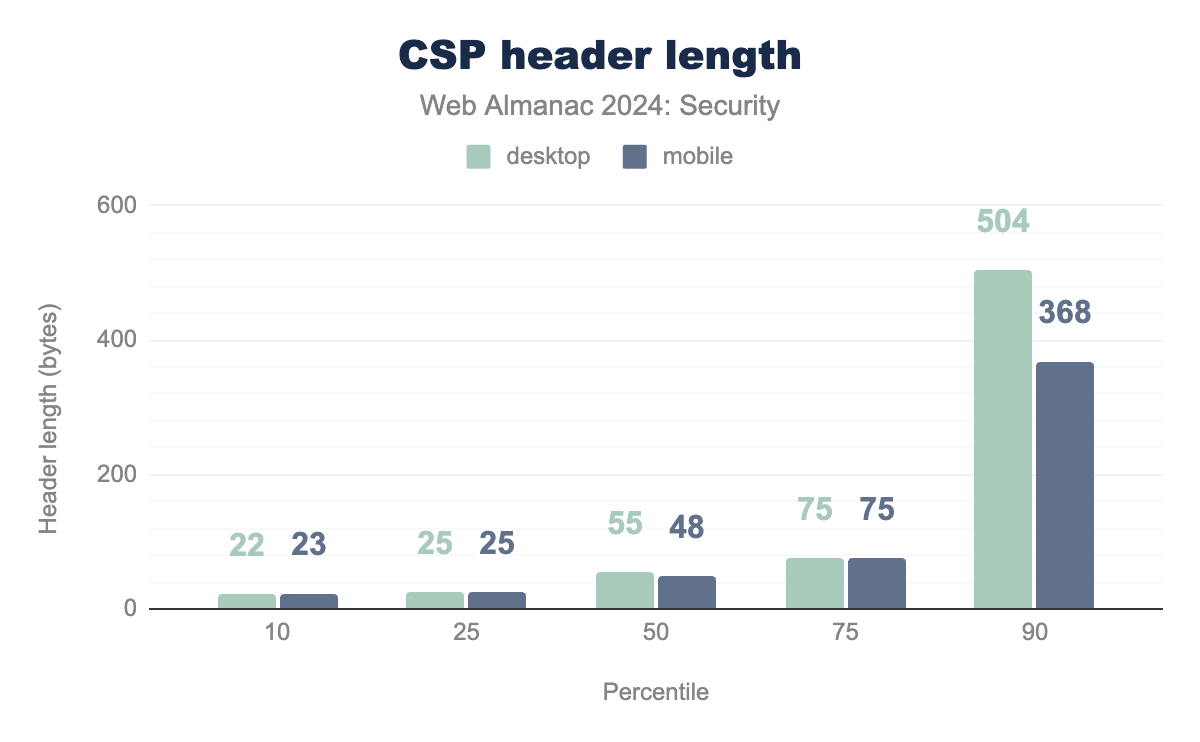

CSP is often regarded as one of the more complex security policies, partly due to the detailed policy language, providing fine-grained control over resource inclusion.

Reviewing the observed CSP header lengths, we find that 75% of all headers are 75 bytes or shorter. For context, the longest policy shown in Figure 17 is also 75 bytes. At the 90th percentile, desktop policies reach 504 bytes and mobile policies 368 bytes, indicating that many websites find it necessary to implement relatively lengthy Content Security Policies. However, when analyzing the distribution of unique allowed hosts across all policies, the 90th percentile shows just 2 unique hosts.

The highest number of unique allowed hosts in a policy was 1,020, while the longest Content Security Policy header reached 65,535 bytes. However, this latter header is inflated by a large number of repeated , characters for unknown reasons. The second longest CSP header, which is valid, spans 33,123 bytes. This unusually large size is due to hundreds of occurrences of the adservice.google domain, each with variations in the top-level domain. Excerpt:

adservice.google.com adservice.google.ad adservice.google.ae …

This suggests that the long tail of excessively large CSP headers is likely caused by computer-generated exhaustive lists of origins. Although this may seem like a specific edge case, it highlights a limitation of CSP: the lack of regex functionality, which could otherwise provide a more efficient and elegant way to handle such cases. However, depending on the website’s implementation, this issue could also be solved by employing the strict-dynamic and nonce- keywords in the script-src directive, which enable a script allowed through its nonce to load additional scripts.
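Counting allowed hosts requires parsing policies first. The Python sketch below shows one plausible way to do this; it is an assumption for illustration, not the Almanac's actual query, and the helper names are hypothetical. It splits a policy on semicolons, keeps only the first occurrence of a duplicated directive (as browsers do), tolerates empty chunks such as those produced by repeated separators, and skips quoted keywords and bare scheme sources like data:. It treats the value as a single policy, so commas, which separate multiple policies within one header, are not handled.

```python
from typing import Dict, List, Set


def parse_csp(header: str) -> Dict[str, List[str]]:
    """Split a policy into {directive: [sources]}; the first duplicate wins."""
    policy: Dict[str, List[str]] = {}
    for chunk in header.split(";"):
        tokens = chunk.split()
        if not tokens:
            continue  # empty chunk, e.g. from ";;" or a trailing ";"
        policy.setdefault(tokens[0].lower(), tokens[1:])
    return policy


def allowed_hosts(header: str) -> Set[str]:
    """Collect distinct host sources across all directives of one policy."""
    hosts: Set[str] = set()
    for sources in parse_csp(header).values():
        for source in sources:
            if source.startswith("'"):
                continue  # keyword, nonce or hash, e.g. 'self' or 'nonce-...'
            if source.endswith(":") and "/" not in source:
                continue  # bare scheme source such as https: or data:
            hosts.add(source.lower())
    return hosts
```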

The most common HTTPS origins included in CSP headers are used for loading fonts, ads and other media fetched from CDNs:

| Host | Desktop | Mobile |
|---|---|---|
| https://www.googletagmanager.com | 0.41% | 0.32% |
| https://fonts.gstatic.com | 0.34% | 0.27% |
| https://fonts.googleapis.com | 0.33% | 0.27% |
| https://www.google-analytics.com | 0.33% | 0.26% |
| https://www.google.com | 0.30% | 0.26% |
| https://www.youtube.com | 0.26% | 0.23% |
| https://*.google-analytics.com | 0.25% | 0.23% |
| https://connect.facebook.net | 0.20% | 0.19% |
| https://*.google.com | 0.19% | 0.19% |
| https://*.googleapis.com | 0.19% | 0.19% |

As for WSS origins, used for allowing WebSocket connections to certain origins, the following were found the most common:

| Host | Desktop | Mobile |
|---|---|---|
| wss://*.intercom.io | 0.08% | 0.08% |
| wss://*.hotjar.com | 0.08% | 0.07% |
| wss://www.livejournal.com | 0.05% | 0.06% |
| wss://*.quora.com | 0.04% | 0.06% |
| wss://*.zopim.com | 0.03% | 0.02% |

Two of these origins are related to customer service and ticketing (intercom.io, zopim.com), one is used for website analytics (hotjar.com), and two are associated with social media (www.livejournal.com, quora.com). For four out of these five websites, we found specific instructions on how to add the origin to the website’s content security policy. This is considered good practice, as it discourages website administrators from using wildcards to allow third-party resources, which would reduce security by allowing broader access than necessary.

Subresource Integrity

While CSP is a powerful tool for ensuring that resources are only loaded from trusted origins, there remains a risk that those resources could be tampered with. For instance, a script might be loaded from a trusted CDN, but if that CDN suffers a security breach and its scripts are compromised, any website using one of those scripts could become vulnerable as well.

Subresource Integrity (SRI) provides a safeguard against this risk. By using the integrity attribute in <script> and <link> tags, a website can specify the expected hash of a resource. If the hash of the received resource does not match the expected hash, the browser refuses to use the resource (a script is not executed and a stylesheet is not applied), thereby protecting the website from potentially compromised content.
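An integrity value consists of the hash algorithm name, a hyphen, and the base64-encoded digest of the exact bytes served. The Python sketch below (hypothetical helper names) computes such a value and performs a simplified version of the browser check, in which only the strongest listed algorithm is compared. For cross-origin resources, the element also needs a crossorigin attribute so that the browser fetches the resource with CORS and can verify it.

```python
import base64
import hashlib

# Relative strength of the hash algorithms SRI supports.
_STRENGTH = {"sha256": 1, "sha384": 2, "sha512": 3}


def integrity_value(resource: bytes, algorithm: str = "sha384") -> str:
    """Return an integrity token such as 'sha384-<base64 digest>'."""
    digest = hashlib.new(algorithm, resource).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def matches_integrity(resource: bytes, integrity: str) -> bool:
    """Simplified SRI check: only tokens of the strongest listed algorithm count."""
    tokens = []
    for token in integrity.split():
        algorithm, _, expected = token.partition("-")
        if algorithm in _STRENGTH and expected:
            tokens.append((algorithm, expected))
    if not tokens:
        return True  # no usable metadata: the resource is not checked at all
    strongest = max(_STRENGTH[alg] for alg, _ in tokens)
    return any(
        integrity_value(resource, alg) == f"{alg}-{expected}"
        for alg, expected in tokens
        if _STRENGTH[alg] == strongest
    )


# Example tag (hypothetical CDN URL); crossorigin enables the CORS fetch SRI needs:
# <script src="https://cdn.example/lib.js"
#         integrity="sha384-..." crossorigin="anonymous"></script>
```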

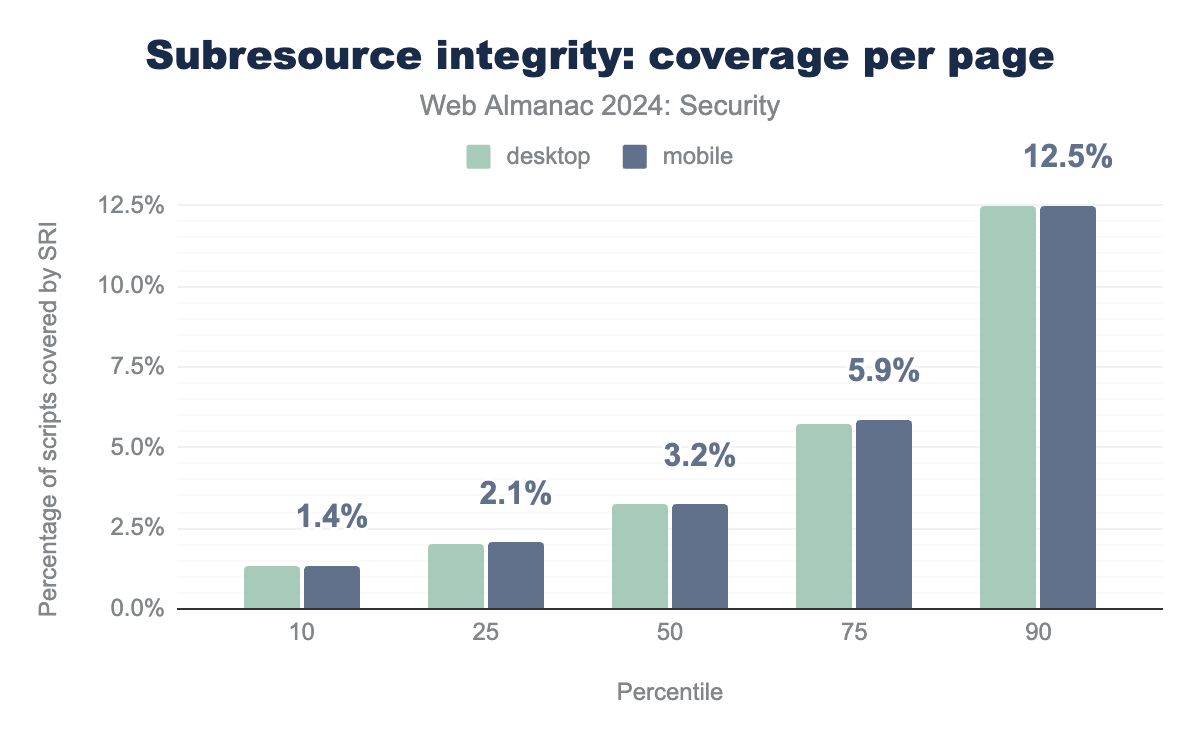

SRI is used by 23.2% and 21.3% of all observed pages for desktop and mobile respectively. This amounts to a relative change in adoption of 13.3% and 18.4% respectively.

The adoption of Subresource Integrity seems to be stagnating, with the median percentage of scripts per page checked against a hash remaining at 3.23% for both desktop and mobile. This figure has remained virtually unchanged since 2022.

| Host | Desktop | Mobile |
|---|---|---|
| www.gstatic.com | 35% | 35% |
| cdnjs.cloudflare.com | 7% | 7% |
| cdn.userway.org | 6% | 6% |
| static.cloudflareinsights.com | 6% | 6% |
| code.jquery.com | 5% | 6% |
| cdn.jsdelivr.net | 4% | 4% |
| d3e54v103j8qbb.cloudfront.net | 2% | 2% |
| t1.daumcdn.net | 2% | 1% |

Most of the hosts from which resources are fetched and protected by SRI are CDNs. A notable difference from 2022’s data is the absence of cdn.shopify.com from the top hosts list (previously 22% on desktop and 23% on mobile). This is due to Shopify having dropped SRI in favor of similar functionality provided by the integrity attribute of importmap, which they explain in a blog post.

Permissions Policy

The Permissions Policy (formerly known as the Feature Policy) is a set of mechanisms that allow websites to control which browser features can be accessed on a webpage, such as geolocation, webcam, microphone, and more. By using the Permissions Policy, websites can restrict feature access for both the main site and any embedded content, enhancing security and protecting user privacy. This is configured through the Permissions-Policy response header for the main site and all its embedded <iframe> elements. Additionally, web administrators can set individual policies for specific <iframe> elements using their allow attribute.

Permissions-Policy header from 2022.

In 2022, the adoption of the Permissions-Policy header saw a significant relative increase of 85%. However, from 2022 to this year, the growth rate has drastically slowed to just 1.3%. This is expected, as the Feature Policy was renamed to Permissions Policy at the end of 2020, resulting in an initial peak. In the following years, growth has remained very low since the header is still supported exclusively by Chromium-based browsers.

| Header | Desktop | Mobile |
|---|---|---|
| interest-cohort=() | 21% | 21% |
| geolocation=(),midi=(),sync-xhr=(),microphone=(),camera=(),magnetometer=(),gyroscope=(),fullscreen=(self),payment=() | 5% | 6% |
| accelerometer=(), autoplay=(), camera=(), cross-origin-isolated=(), display-capture=(self), encrypted-media=(), fullscreen=*, geolocation=(self), gyroscope=(), keyboard-map=(), magnetometer=(), microphone=(), midi=(), payment=*, picture-in-picture=(), publickey-credentials-get=(), screen-wake-lock=(), sync-xhr=(), usb=(), xr-spatial-tracking=(), gamepad=(), serial=() | 4% | 4% |
| accelerometer=(self), autoplay=(self), camera=(self), encrypted-media=(self), fullscreen=(self), geolocation=(self), gyroscope=(self), magnetometer=(self), microphone=(self), midi=(self), payment=(self), usb=(self) | 3% | 3% |
| accelerometer=(), camera=(), geolocation=(), gyroscope=(), magnetometer=(), microphone=(), payment=(), usb=() | 3% | 3% |
| browsing-topics=() | 3% | 3% |
| geolocation=self | 2% | 2% |

Only 2.8% of desktop hosts and 2.5% of mobile hosts set the policy using the Permissions-Policy response header. Its most common use is solely to opt out of Google’s Federated Learning of Cohorts (FLoC): 21% of hosts that implement the Permissions-Policy header set the policy to interest-cohort=(). This usage is partly due to the controversy that FLoC sparked during its trial period. Although FLoC was ultimately replaced by the Topics API, the continued use of the interest-cohort directive highlights how specific concerns can shape the adoption of web policies.

All other observed headers implemented by at least 2% of hosts are aimed at restricting the permission capabilities of the website itself and/or its embedded <iframe> elements. Similar to the Content Security Policy, the Permissions Policy is “open by default” instead of “secure by default”: absence of the policy entails absence of protection. This approach aims to avoid breaking website functionality when introducing new policies. Notably, 0.28% of sites explicitly use the * wildcard policy, allowing the website and all embedded <iframe> elements (where no more restrictive allow attribute is present) to request any permission - though this is the default behavior when the Permissions Policy is not set.
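The header values listed in the table of common Permissions-Policy headers follow a structured syntax: each feature maps to a parenthesized allowlist, where an empty list disables the feature, self is a bare token, origins are quoted strings, and * allows all origins. This Python sketch (the helper and the example origin are hypothetical) serializes a mapping into that format, which makes an explicit, restrictive policy easy to generate.

```python
from typing import Dict, Sequence


def permissions_policy(features: Dict[str, Sequence[str]]) -> str:
    """Serialize {feature: allowlist} into a Permissions-Policy header value.

    Allowlist entries are "*", "self", or an origin string; an empty
    allowlist disables the feature for the page and all its iframes.
    """
    parts = []
    for feature, allowlist in features.items():
        if "*" in allowlist:
            parts.append(f"{feature}=*")
            continue
        # "self" is a bare token; origins must be serialized as quoted strings.
        items = [entry if entry == "self" else f'"{entry}"' for entry in allowlist]
        parts.append(f"{feature}=({' '.join(items)})")
    return ", ".join(parts)


header = permissions_policy({
    "camera": [],
    "microphone": [],
    "geolocation": ["self", "https://maps.example"],  # hypothetical origin
})
# header == 'camera=(), microphone=(), geolocation=(self "https://maps.example")'
```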

The Permissions Policy can also be defined individually for each embedded <iframe> through its allow attribute. For example, an <iframe> can be permitted to use the geolocation and camera permissions by setting the attribute as follows:

<iframe src="https://example.com" allow="geolocation 'src'; camera *;"></iframe>

Out of the 21.4 million <iframe> elements observed in the crawl, half included the allow attribute. This marks a significant increase compared to just the previous month, when only 21% of <iframe> elements had the allow attribute, indicating that its usage more than doubled in a single month. A plausible explanation for this rapid change is that one or several widely used third-party services propagated this update across their <iframe> elements. Given the ad-specific directives we now observe (rows 1 and 3 in the table below), none of which were present in 2022, it is likely that an ad service is responsible for this shift.

| Directive | Desktop | Mobile |
|---|---|---|
| join-ad-interest-group | 43% | 44% |
| attribution-reporting | 28% | 28% |
| run-ad-auction | 25% | 24% |
| encrypted-media | 19% | 18% |
| autoplay | 18% | 18% |
| picture-in-picture | 12% | 12% |
| clipboard-write | 10% | 10% |
| gyroscope | 9% | 10% |
| accelerometer | 9% | 10% |
| web-share | 7% | 7% |

Most common allow attribute directives.

Compared to 2022, the top 10 most common directives are now led by three newly introduced directives: join-ad-interest-group, attribution-reporting and run-ad-auction. The first and third directives are specific to Google’s Privacy Sandbox. For all observed directives in the top 10, almost none were used in combination with an origin or keyword (i.e., 'src', 'self', and 'none'), meaning the loaded page is allowed to request the indicated permission regardless of its origin.
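The measurement described above can be approximated with a small parser. This Python sketch splits an allow attribute into its directives and reports the features that are listed without any origin or keyword. The function names are hypothetical, and the parsing is simplified: it does not validate origins or feature names.

```python
from __future__ import annotations


def parse_allow(allow: str) -> dict[str, list[str]]:
    """Split an <iframe> allow attribute into {feature: allowlist entries}."""
    policy: dict[str, list[str]] = {}
    for directive in allow.split(";"):
        tokens = directive.split()
        if not tokens:
            continue  # e.g. the empty entry after a trailing ";"
        feature, allowlist = tokens[0], tokens[1:]
        policy[feature] = allowlist
    return policy


def features_without_allowlist(allow: str) -> list[str]:
    """Features listed without any origin or keyword such as 'self' or 'none'."""
    return [feature for feature, entries in parse_allow(allow).items() if not entries]


print(features_without_allowlist(
    "join-ad-interest-group; run-ad-auction; attribution-reporting"))
print(parse_allow("geolocation 'self'; camera *;"))
```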

Iframe sandbox

Embedding third-party websites within <iframe> elements always carries risks, though it might be necessary to enrich a web application’s functionality. Website administrators should be aware that a rogue <iframe> can exploit several mechanisms to harm users, such as launching pop-ups or redirecting the top-level page to a malicious domain.

These risks can be curbed with the sandbox attribute on <iframe> elements. The content loaded within the frame is then restricted to the rules defined by the attribute, which prevents the embedded content from abusing capabilities. An empty string as value yields the strictest policy. This policy can be relaxed by adding specific directives, each of which lifts a particular restriction. For example, the following <iframe> would allow the embedded webpage to run scripts:

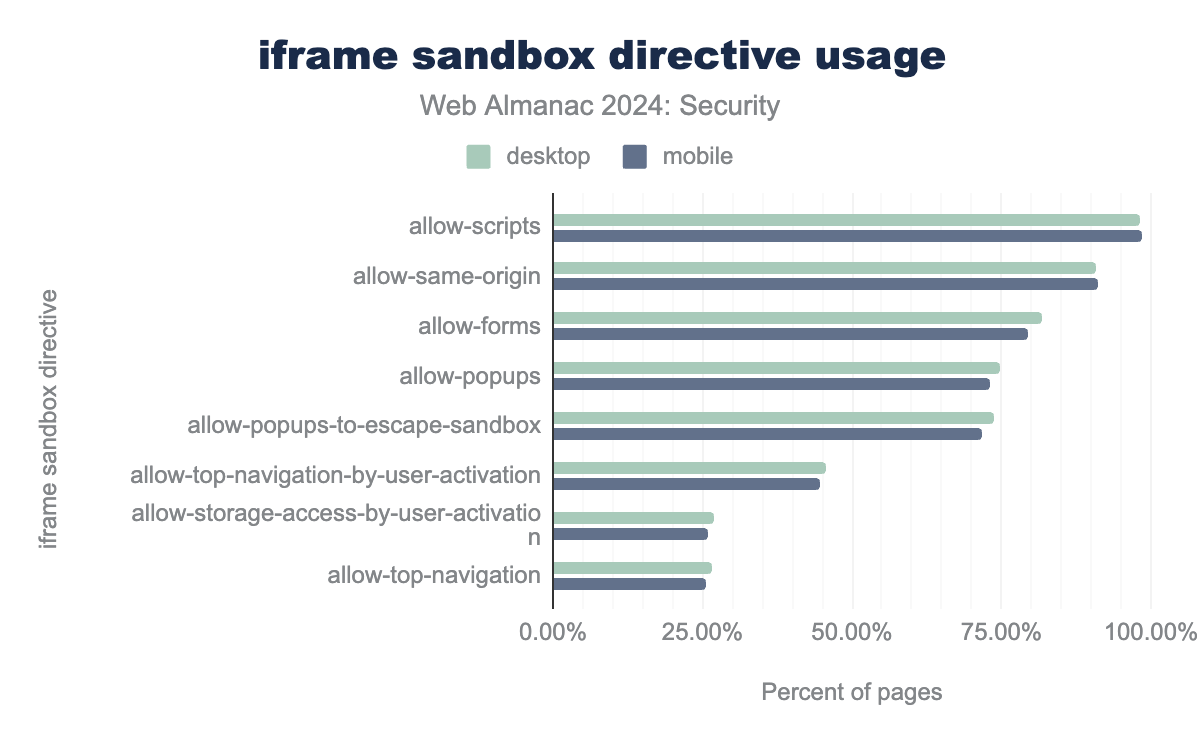

```html
<iframe src="https://example.com" sandbox="allow-scripts"></iframe>
```

The sandbox attribute was observed in 28.4% and 27.5% of <iframe> elements on desktop and mobile respectively, a considerable drop from the 35.2% and 32% reported in 2022. Much like the sudden spike in allow attribute usage mentioned in the previous section, this decline could be attributed to a change in the modus operandi of an embedded service that omitted the sandbox attribute from its template <iframe>.

| Sandbox directive | Desktop | Mobile |
|---|---|---|
| allow-scripts | 98% | 99% |
| allow-same-origin | 91% | 91% |
| allow-forms | 82% | 80% |
| allow-popups | 75% | 73% |
| allow-popups-to-escape-sandbox | 74% | 72% |
| allow-top-navigation-by-user-activation | 46% | 45% |
| allow-storage-access-by-user-activation | 27% | 26% |
| allow-top-navigation | 27% | 26% |

Directives used in <iframe> elements with a sandbox attribute.

More than 98% of pages that set the sandbox attribute on an iframe use it to allow scripts in the embedded webpage through the allow-scripts directive.
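Because allow-scripts and allow-same-origin each appear in the vast majority of sandboxed frames, it is worth checking how tokens combine. The Python sketch below, built around a hypothetical review_sandbox helper, flags three combinations that weaken the sandbox. The first is allow-scripts together with allow-same-origin: when the embedded document is same-origin with the embedding page, it can remove its own sandbox attribute. The second is allow-top-navigation, for which allow-top-navigation-by-user-activation is the narrower alternative. The third is allow-popups-to-escape-sandbox, since popups opened by the frame then do not inherit the sandbox. This is a lint-style heuristic, not a complete policy check.

```python
from __future__ import annotations


def review_sandbox(value: str) -> list[str]:
    """Flag sandbox token combinations that weaken the sandbox (heuristic)."""
    # Sandbox tokens form an unordered, ASCII case-insensitive set.
    tokens = {token.lower() for token in value.split()}
    warnings = []
    if {"allow-scripts", "allow-same-origin"} <= tokens:
        warnings.append("allow-scripts + allow-same-origin: a same-origin frame "
                        "could remove its own sandbox attribute")
    if "allow-top-navigation" in tokens:
        warnings.append("allow-top-navigation: consider "
                        "allow-top-navigation-by-user-activation instead")
    if "allow-popups-to-escape-sandbox" in tokens:
        warnings.append("allow-popups-to-escape-sandbox: popups it opens are not sandboxed")
    return warnings


# A combination of the five most common tokens triggers two warnings.
print(review_sandbox("allow-scripts allow-same-origin allow-forms allow-popups "
                     "allow-popups-to-escape-sandbox"))
```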

Attack preventions

Web applications can be exploited in numerous ways, and while there are many methods to protect them, it can be difficult to see the full range of options. This challenge is heightened when protections are not enabled by default or require opt-in. In other words, website administrators must be aware of potential attack vectors relevant to their application and how to prevent them. Therefore, evaluating which attack prevention measures are in place is crucial for assessing the overall security of the Web.

Security header adoption

Most security policies are configured through response headers, which instruct the browser on which policies to enforce. Although not every security policy is relevant for every website, the absence of certain security headers suggests that website administrators may not have considered or prioritized security measures.

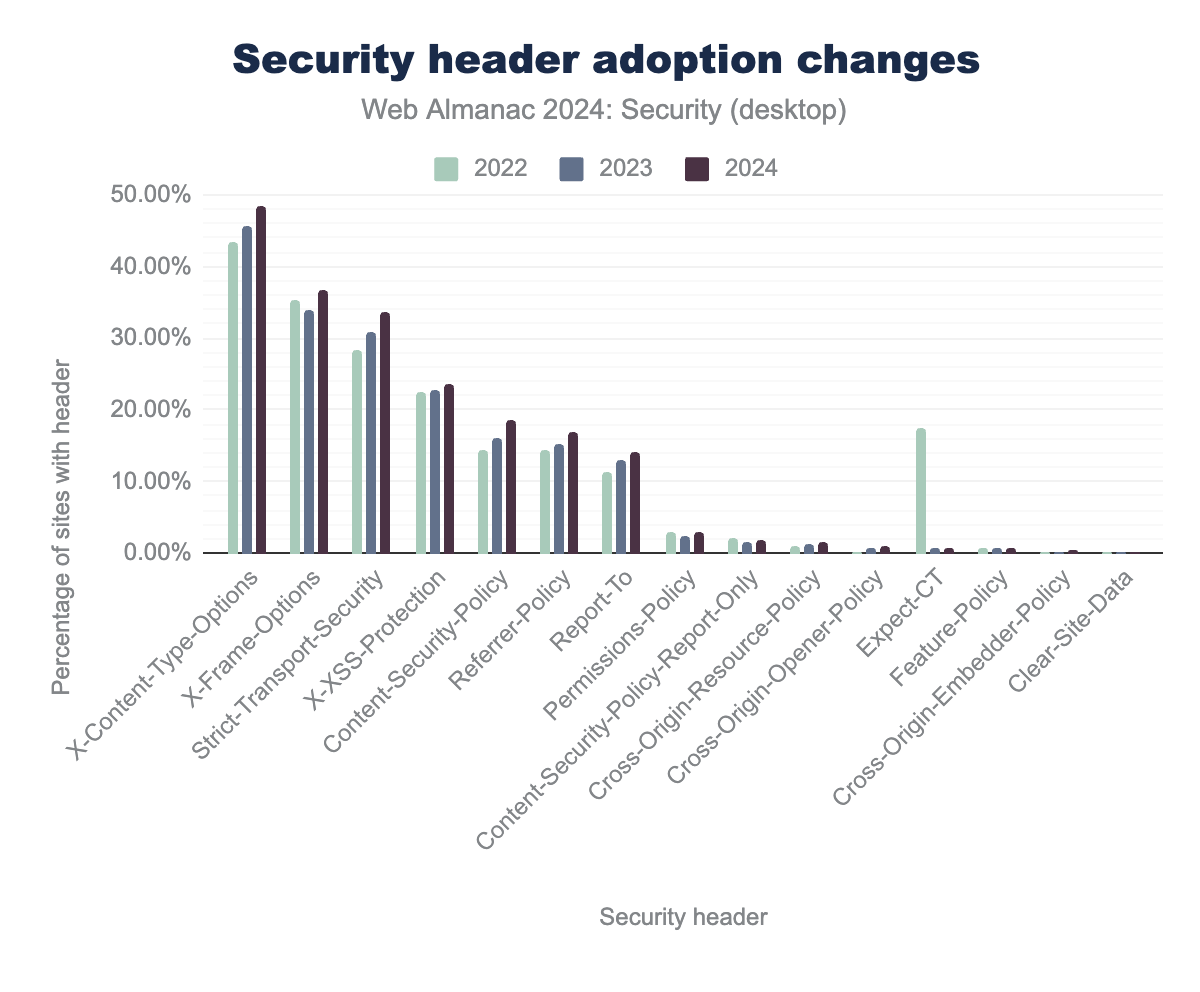

| Header | 2022 | 2023 | 2024 |
|---|---|---|---|
| X-Content-Type-Options | 43% | 46% | 48% |
| X-Frame-Options | 35.3% | 34% | 37% |
| Strict-Transport-Security | 28% | 31% | 34% |
| X-XSS-Protection | 23% | 23% | 23% |
| Content-Security-Policy | 14% | 16% | 19% |
| Referrer-Policy | 14% | 15% | 17% |
| Report-To | 11% | 13% | 14% |
| Permissions-Policy | 2.82% | 2.45% | 2.82% |
| Content-Security-Policy-Report-Only | 2.13% | 1.58% | 1.83% |
| Cross-Origin-Resource-Policy | 1.03% | 1.31% | 1.52% |
| Cross-Origin-Opener-Policy | 0.23% | 0.68% | 1.07% |
| Expect-CT | 17% | 0.65% | 0.71% |
| Feature-Policy | 0.77% | 0.65% | 0.65% |
| Cross-Origin-Embedder-Policy | 0.04% | 0.22% | 0.35% |
| Clear-Site-Data | 0.01% | 0.01% | 0.02% |

Security header usage for the years 2022, 2023 and 2024.

Over the past two years, three security headers have seen a decrease in usage. The most notable decline is in the Expect-CT header, which was used to opt into Certificate Transparency. This header is now deprecated because Certificate Transparency is enabled by default. Similarly, usage of the Feature-Policy header has decreased because it was replaced by the Permissions-Policy header. Lastly, the Content-Security-Policy-Report-Only header has also declined. This header was primarily used to test and monitor the impact of a Content Security Policy by sending violation reports to a specified endpoint. It’s important to note that the Report-Only header does not enforce the Content Security Policy itself, so its decline in usage does not indicate a reduction in security. Since none of these declines reduces protection, we can conclude that the overall adoption of security headers continues to grow, reflecting a positive trend in web security.

The largest absolute increases since 2022 are for Strict-Transport-Security (+5.3 percentage points), X-Content-Type-Options (+4.9 percentage points) and Content-Security-Policy (+4.2 percentage points).
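To audit a single response against headers like these, a check along the following lines could be used. This is a minimal Python sketch that assumes the response headers are available as a dictionary. The selection in SECURITY_HEADERS is hypothetical and leaves out Expect-CT and Feature-Policy, which are deprecated or replaced as explained above. HTTP header names are case-insensitive, so the comparison lower-cases them.

```python
from __future__ import annotations

# Hypothetical selection of headers to audit; Expect-CT and Feature-Policy
# are left out because they are deprecated or replaced.
SECURITY_HEADERS = (
    "Strict-Transport-Security",
    "Content-Security-Policy",
    "X-Content-Type-Options",
    "X-Frame-Options",
    "Referrer-Policy",
    "Permissions-Policy",
    "Cross-Origin-Opener-Policy",
    "Cross-Origin-Embedder-Policy",
    "Cross-Origin-Resource-Policy",
)


def missing_security_headers(headers: dict[str, str]) -> list[str]:
    """List audited headers absent from a response (names are case-insensitive)."""
    present = {name.lower() for name in headers}
    return [name for name in SECURITY_HEADERS if name.lower() not in present]


response = {"strict-transport-security": "max-age=31536000",
            "X-Content-Type-Options": "nosniff"}
print(missing_security_headers(response))
```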

Preventing clickjacking with CSP and X-Frame-Options

As discussed previously, one of the primary uses of the Content Security Policy is to prevent clickjacking attacks. This is achieved through the frame-ancestors directive, which allows websites to specify which origins are permitted to embed their pages within a frame. There, we saw that this directive is commonly used to either completely prohibit embedding or restrict it to the same origin (Figure 17).

Another measure against clickjacking is the X-Frame-Options (XFO) header, though it provides less granular control compared to CSP. The XFO header can be set to SAMEORIGIN, allowing the page to be embedded only by other pages from the same origin, or DENY, which completely blocks any embedding of the page. As shown in the table below, most headers are configured to relax the policy by allowing same-origin websites to embed the page.

| X-Frame-Options value | Desktop | Mobile |
|---|---|---|
| SAMEORIGIN | 73% | 73% |
| DENY | 23% | 24% |

X-Frame-Options header values.

Although it is deprecated, 0.6% of observed X-Frame-Options headers on desktop and 0.7% on mobile still use the ALLOW-FROM directive, which functions similarly to the frame-ancestors directive by specifying trusted origins that can embed the page. However, since modern browsers ignore X-Frame-Options headers containing the ALLOW-FROM directive, this could create gaps in the website’s clickjacking defenses. That said, this practice may be intended for backward compatibility, where the deprecated header is used alongside a supported Content Security Policy that includes the frame-ancestors directive.
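A site that wants consistent clickjacking protection can send both headers when X-Frame-Options is able to express the intended policy, and rely on frame-ancestors alone when specific origins must be allowed. The Python sketch below illustrates that mapping: DENY corresponds to frame-ancestors 'none' and SAMEORIGIN to frame-ancestors 'self'. The function name and its input format are hypothetical.

```python
from __future__ import annotations


def anti_framing_headers(allowed: str | list[str]) -> dict[str, str]:
    """Return clickjacking-protection headers.

    allowed: "none" (no framing), "self" (same-origin framing only),
    or a non-empty list of origins permitted to embed the page.
    """
    if allowed == "none":
        return {"Content-Security-Policy": "frame-ancestors 'none'",
                "X-Frame-Options": "DENY"}
    if allowed == "self":
        return {"Content-Security-Policy": "frame-ancestors 'self'",
                "X-Frame-Options": "SAMEORIGIN"}
    if isinstance(allowed, str) or not allowed:
        raise ValueError("expected 'none', 'self' or a non-empty list of origins")
    # X-Frame-Options cannot express an origin list (ALLOW-FROM is ignored by
    # modern browsers), so only the CSP directive is emitted here.
    return {"Content-Security-Policy": "frame-ancestors " + " ".join(allowed)}


print(anti_framing_headers("self"))
print(anti_framing_headers(["https://partner.example"]))
```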

Preventing attacks using Cross-Origin policies

One of the core principles of the Web is the reuse and embedding of cross-origin resources. However, our security perspective on this practice has significantly shifted with the emergence of micro-architectural attacks like Spectre and Meltdown, and Cross-Site Leaks (XS-Leaks) that leverage side-channels to uncover potentially sensitive user information. These threats have created a growing need for mechanisms to control whether and how resources can be rendered by other websites, whilst ensuring better protection against these new exploits.

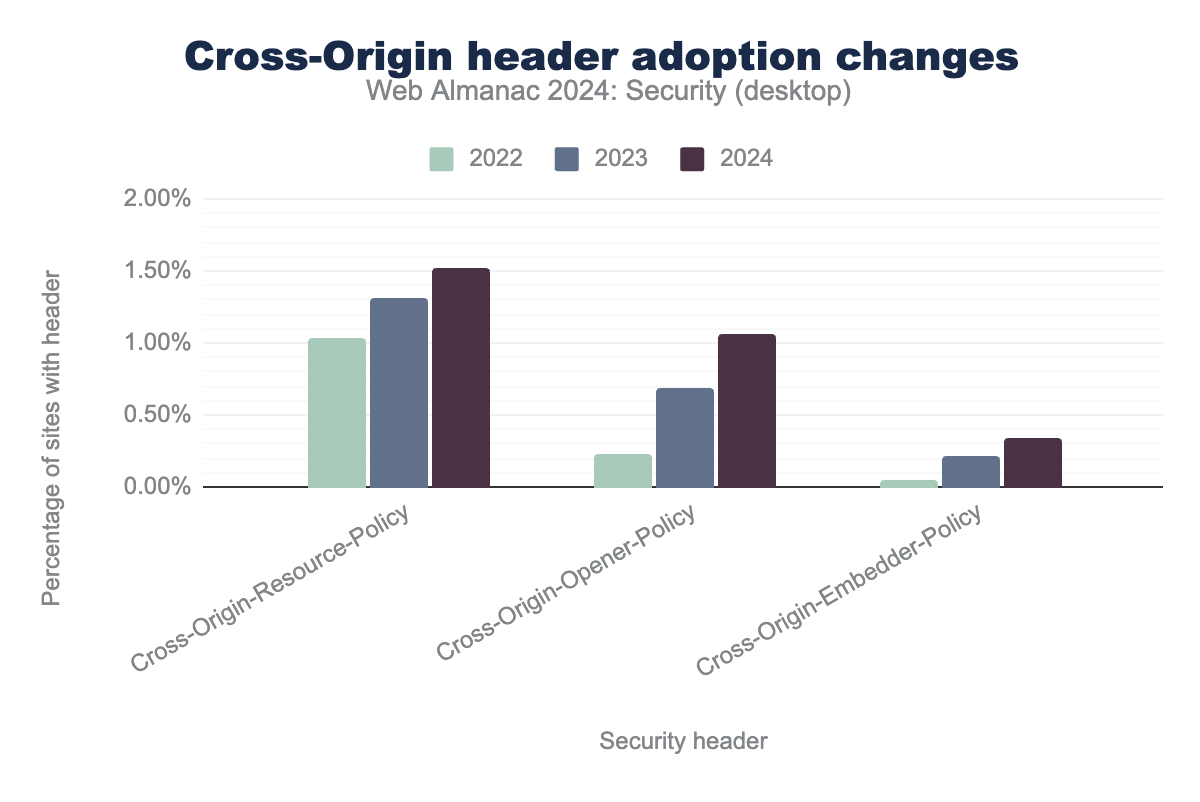

This demand led to the introduction of several new security headers collectively known as Cross-Origin policies: Cross-Origin-Resource-Policy (CORP), Cross-Origin-Embedder-Policy (COEP) and Cross-Origin-Opener-Policy (COOP). These headers provide robust countermeasures against side-channel attacks by controlling how resources are shared and embedded across origins. Adoption of these policies has been steadily increasing, with the use of Cross-Origin-Opener-Policy nearly doubling each year for the past two years.

| Header | 2022 | 2023 | 2024 |
|---|---|---|---|
| Cross-Origin-Resource-Policy | 1.03% | 1.31% | 1.52% |
| Cross-Origin-Opener-Policy | 0.23% | 0.68% | 1.07% |
| Cross-Origin-Embedder-Policy | 0.04% | 0.22% | 0.35% |
| Clear-Site-Data | 0.01% | 0.01% | 0.02% |

Cross-Origin- headers in requests for the years 2022, 2023 and 2024.

Cross Origin Embedder Policy

The Cross Origin Embedder Policy restricts the capabilities of websites that embed cross-origin resources. By default, websites no longer have access to powerful features such as SharedArrayBuffer and unthrottled timers through the Performance.now() API, as these can be exploited to infer sensitive information from cross-origin resources. If a website requires access to these features, it must signal to the browser that it will only load cross-origin resources either through credentialless requests (credentialless) or when those resources explicitly permit access from other origins using the Cross-Origin-Resource-Policy header (require-corp).

| COEP value | Desktop | Mobile |
|---|---|---|
| unsafe-none | 86% | 88% |
| require-corp | 7% | 5% |
| credentialless | 2% | 2% |

The majority of websites that set the Cross-Origin-Embedder-Policy header indicate that they do not require access to the powerful features mentioned above (unsafe-none). This is also the default behavior when the COEP header is absent: unless explicitly configured otherwise, websites can embed cross-origin resources without this restriction but do not get access to these features.

Cross Origin Resource Policy

Conversely, websites that serve resources can use the Cross-Origin-Resource-Policy response header to grant explicit permission for other websites to render the served resource. This header can take one of three values: same-site, allowing only requests from the same site to receive the resource; same-origin, restricting access to requests from the same origin; and cross-origin, permitting any origin to access the resource. Beyond mitigating side-channel attacks, CORP can also protect against Cross-Site Script Inclusion (XSSI). For instance, by preventing a dynamic JavaScript resource from being served to cross-origin websites, CORP helps prevent scripts containing sensitive information from leaking.

| CORP value | Desktop | Mobile |
|---|---|---|
| cross-origin | 91% | 91% |
| same-origin | 5% | 5% |
| same-site | 4% | 4% |

The CORP header is primarily used to allow access to the served resource from any origin, with the cross-origin value being the most commonly set. In fewer cases, the header restricts access: less than 5% of websites limit resources to the same origin, and less than 4% restrict them to the same site.

Cross Origin Opener Policy

Cross Origin Opener Policy (COOP) helps control how other web pages can open and reference the protected page. COOP protection can be explicitly disabled with unsafe-none, which is also the default behavior in the absence of the header. The same-origin value only allows references from pages with the same origin, and same-origin-allow-popups additionally keeps references to the windows or tabs (popups) that the page opens itself. As with the Cross Origin Embedder Policy, features such as SharedArrayBuffer and unthrottled Performance.now() are restricted unless COOP is set to same-origin (together with a suitable COEP value).

| COOP value | Desktop | Mobile |
|---|---|---|
| same-origin | 49% | 48% |
| unsafe-none | 35% | 37% |
| same-origin-allow-popups | 14% | 14% |

Nearly half of all observed COOP headers employ the strictest setting, same-origin.
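Because the powerful features mentioned above depend on COOP and COEP together, a server-side check for the required combination is a useful sanity test. The following Python sketch is simplified. It only inspects the two headers (COOP same-origin plus COEP require-corp or credentialless) and ignores other conditions browsers may apply. The function names are hypothetical. In the browser itself, a page can read self.crossOriginIsolated to see the outcome.

```python
from __future__ import annotations


def _token(value: str | None) -> str | None:
    # Drop parameters such as '; report-to="endpoint"' and surrounding spaces.
    return None if value is None else value.split(";", 1)[0].strip()


def is_cross_origin_isolated(headers: dict[str, str]) -> bool:
    """Simplified check for the COOP + COEP combination needed for isolation."""
    lowered = {name.lower(): value for name, value in headers.items()}
    # Absent (or empty) headers fall back to the default, unsafe-none.
    coop = _token(lowered.get("cross-origin-opener-policy")) or "unsafe-none"
    coep = _token(lowered.get("cross-origin-embedder-policy")) or "unsafe-none"
    return coop == "same-origin" and coep in {"require-corp", "credentialless"}


print(is_cross_origin_isolated({"Cross-Origin-Opener-Policy": "same-origin",
                                "Cross-Origin-Embedder-Policy": "require-corp"}))
```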

Preventing attacks using Clear-Site-Data

The Clear-Site-Data header allows websites to easily clear browsing data associated with them, including cookies, storage, and cache. This is particularly useful as a security measure when a user logs out, ensuring that authentication tokens and other sensitive information are removed and cannot be abused. The header’s value specifies what types of data the website requests the browser to clear.

Adoption of the Clear-Site-Data header remains limited: only 2,071 hosts (0.02% of all hosts) use this header. However, this functionality is primarily useful on logout pages, which the crawler does not capture. To investigate logout pages, the crawler would need to be extended to detect and interact with account registration, login, and logout functionality, an undertaking that would require considerable effort. Security and privacy researchers have already made some progress in this area, for example by automating logins to web pages and automating account registration.

| Clear-Site-Data value | Desktop | Mobile |
|---|---|---|
| "cache" | 36% | 34% |
| cache | 22% | 23% |
| * | 12% | 13% |
| cookies | 4% | 6% |
| "cache", "storage", "executionContexts" | 3% | 4% |
| "cookies" | 2% | 2% |
| "cache", "cookies", "storage", "executionContexts" | 2% | 2% |
| "storage" | 2% | 2% |
| "cache", "storage" | 1% | 1% |
| cache, cookies, storage | 1% | 1% |

Clear-Site-Data headers.

Current usage data shows that the Clear-Site-Data header is predominantly used to clear cache. It’s important to note that the values in this header must be enclosed in quotation marks; for instance, cache is incorrect and should be written as "cache". Interestingly, there has been significant improvement in adherence to this syntax rule: in 2022, 65% of desktop and 63% of mobile websites were found using the incorrect cache value. However, these numbers have now dropped to 22% and 23% for desktop and mobile, respectively.
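Because the quoting rule is easy to get wrong, generating the header programmatically avoids the unquoted cache variant entirely. The Python sketch below builds a correctly quoted value and flags unquoted entries in an existing header. The helper names are hypothetical. KNOWN_TYPES lists only the data types named in the Clear Site Data specification that we rely on here, so browsers may accept additional ones. The sketch also flags a bare *, because the specification writes the wildcard as "*" as well.

```python
from __future__ import annotations

# Data types named in the Clear Site Data specification; browsers may support more.
KNOWN_TYPES = ("cache", "cookies", "storage", "executionContexts", "*")


def clear_site_data(*types: str) -> str:
    """Build a Clear-Site-Data value with every type correctly quoted."""
    unknown = [t for t in types if t not in KNOWN_TYPES]
    if unknown or not types:
        raise ValueError(f"expected one or more of {KNOWN_TYPES}, got {types}")
    return ", ".join(f'"{t}"' for t in types)


def unquoted_entries(value: str) -> list[str]:
    """Return entries that lack the mandatory double quotes (e.g. bare cache)."""
    entries = [entry.strip() for entry in value.split(",") if entry.strip()]
    return [e for e in entries if not (len(e) >= 2 and e[0] == e[-1] == '"')]


print(clear_site_data("cache", "cookies", "storage"))  # e.g. for a logout response
print(unquoted_entries('"cache", cookies'))
```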

Preventing attacks using <meta>

Some security mechanisms on the web can be configured through meta tags in the source HTML of a web page, for instance the Content-Security-Policy and Referrer-Policy. This year, 0.61% of mobile websites enable CSP and 2.53% enable Referrer-Policy using meta tags. Compared to 2022, use of this method has increased slightly for setting the Referrer-Policy but decreased slightly for setting CSP.

| Meta tag | Desktop | Mobile |
|---|---|---|
| includes Referrer-Policy | 2.7% | 2.5% |
| includes CSP | 0.6% | 0.6% |
| includes not-allowed policy | 0.1% | 0.1% |

Developers sometimes also try to enable other security features through a meta tag, which is not allowed, so the browser ignores them. Using the same example as in 2022, 4,976 pages try to set X-Frame-Options using a meta tag, which the browser will ignore. This is an absolute increase compared to 2022, but only because the data set included more than twice as many pages. In relative terms, there is a slight decrease, from 0.04% to 0.03% of mobile pages and from 0.05% to 0.03% of desktop pages.

Web Cryptography API

The Web Cryptography API is a JavaScript API for performing basic cryptographic operations on a website, such as random number generation, hashing, signature generation and verification, and encryption and decryption.

| Feature | Desktop | Mobile |
|---|---|---|
| CryptoGetRandomValues | 56.9% | 53.2% |
| SubtleCryptoDigest | 1.9% | 1.7% |
| SubtleCryptoImportKey | 1.7% | 1.6% |
| CryptoAlgorithmSha256 | 1.6% | 1.3% |
| CryptoAlgorithmEcdh | 1.3% | 1.3% |
| CryptoAlgorithmSha512 | 0.2% | 0.2% |
| CryptoAlgorithmAesCbc | 0.2% | 0.1% |
| CryptoAlgorithmSha1 | 0.2% | 0.2% |
| SubtleCryptoEncrypt | 0.2% | 0.1% |
| SubtleCryptoSign | 0.1% | 0.1% |

Compared to the last Almanac, usage of CryptoGetRandomValues continued to drop, and did so at a much faster rate over the past two years, falling to 53% on mobile. Despite that drop, it remains by far the most widely adopted feature. After CryptoGetRandomValues, the next five most used features have become more widely adopted, rising from under 0.7% to adoption rates between 1.3% and 2%.

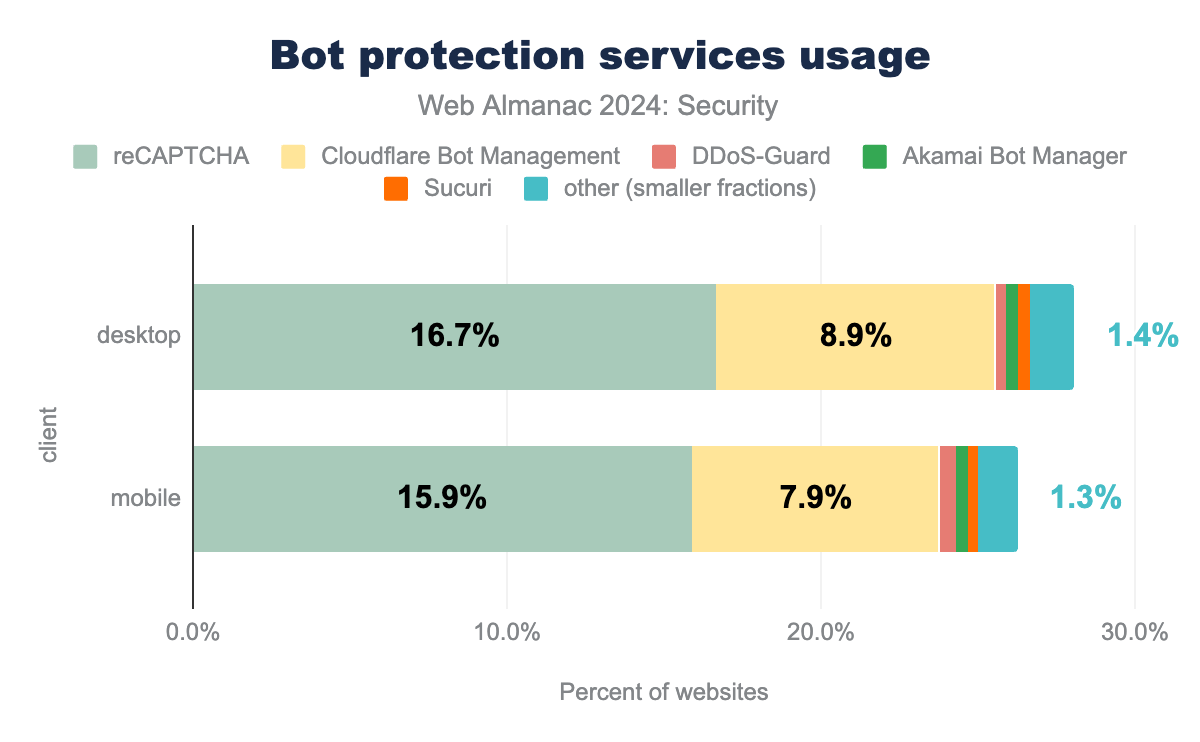

Bot protection services

Because bad bots remain a significant issue on the modern web, we see that the adoption of protections against bots has continued to rise. We observe another jump in adoption from 29% of desktop sites and 26% of mobile sites in 2022 to 33% and 32% respectively now. It seems that developers have invested in protecting more mobile websites, bringing the number of protected desktop and mobile sites closer together.

reCAPTCHA remains the most widely used protection mechanism, although its usage has declined. Cloudflare Bot Management, in comparison, has seen an increase in adoption and remains the second most widely used protection.

HTML sanitization

New additions to major browsers are the setHTMLUnsafe and parseHTMLUnsafe APIs, which allow developers to use declarative shadow DOM from JavaScript. When a developer uses custom HTML components from JavaScript that include a declarative shadow DOM definition using <template shadowrootmode="open">...</template>, placing such a component on the page with innerHTML will not work as expected. Using setHTMLUnsafe instead ensures that the declarative shadow DOM is taken into account.

Developers must take care to pass only already-safe values to these APIs: as their names imply, they are unsafe and do not sanitize their input, which may lead to XSS attacks.

| Feature | Desktop (pages) | Mobile (pages) |
|---|---|---|
| ParseHTMLUnsafe | 6 | 6 |
| SetHTMLUnsafe | 2 | 2 |

These APIs are new, so low adoption is to be expected. We found only 6 pages in total using parseHTMLUnsafe and 2 using setHTMLUnsafe, which is an extremely small number relative to the number of pages visited.

Drivers of security mechanism adoption

Web developers can have many reasons to adopt more security practices. The three primary ones are:

- Societal: in certain countries there is more security-oriented education, or there may be local laws that take more punitive measures in case of a data breach or other cybersecurity-related incident

- Technological: depending on the technology stack in use, it might be easier to adopt security features. Some features might not be supported and would require additional effort to implement. Adding to that, certain vendors of software might enable security features by default in their products or hosted solutions

- Popularity: widely popular websites may face more targeted attacks than a website that is less known, but may also attract more security researchers or white hat hackers to look at their products, helping the site implement more security features correctly

Location of website

The location where a website is hosted or where its developers are based can often affect the adoption of security features. Developers’ security awareness plays a role, as they cannot implement features they aren’t aware of. Additionally, local laws can sometimes mandate the adoption of certain security practices.

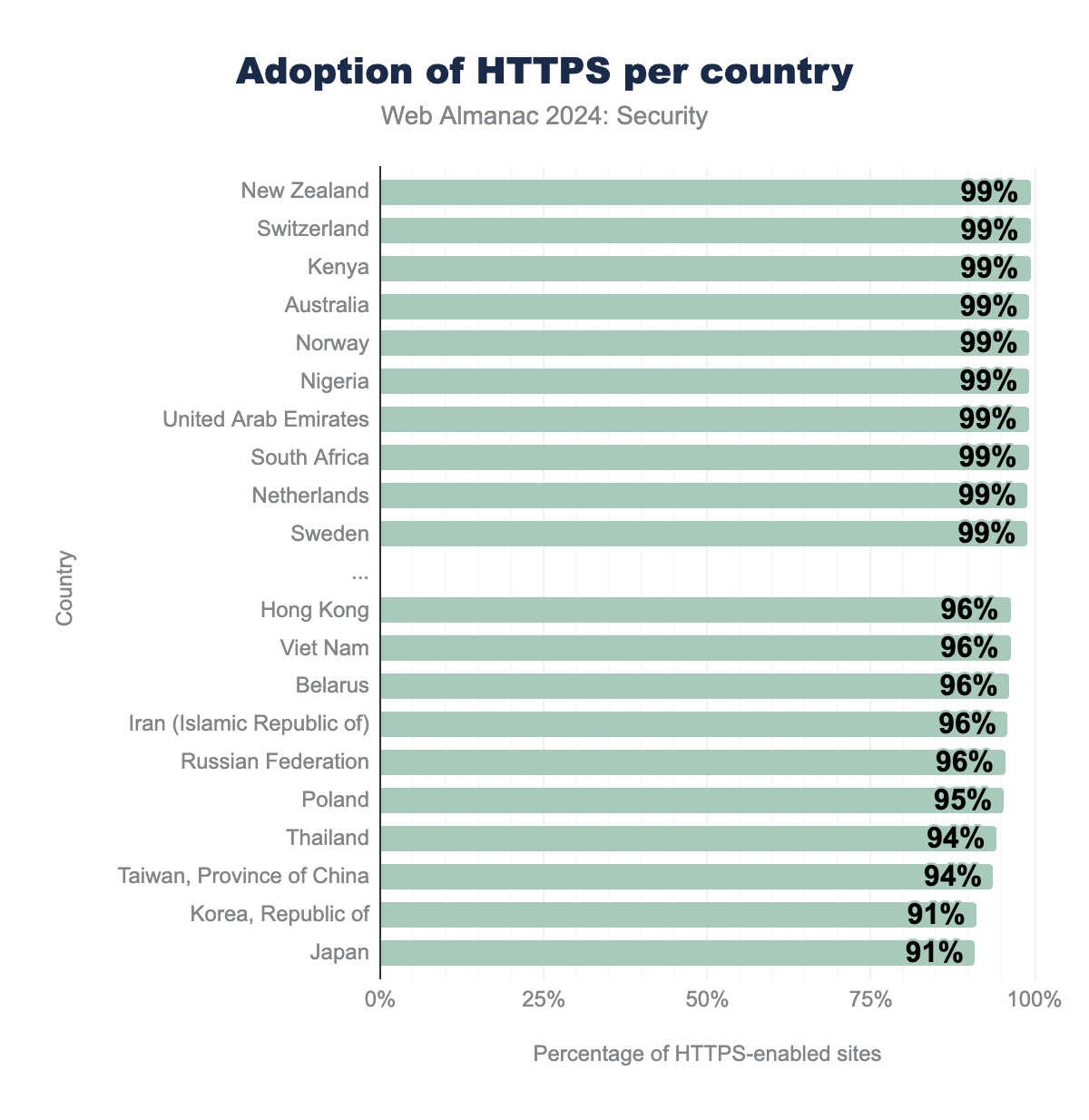

New Zealand continues to lead in HTTPS adoption, but many countries follow extremely closely: the top 9 countries all exceed 99% adoption! The trailing 10 countries have also seen HTTPS adoption rise by 9 to 10 percentage points, and all countries now exceed 90%. This shows that almost all countries continue their efforts to make HTTPS the default mode.

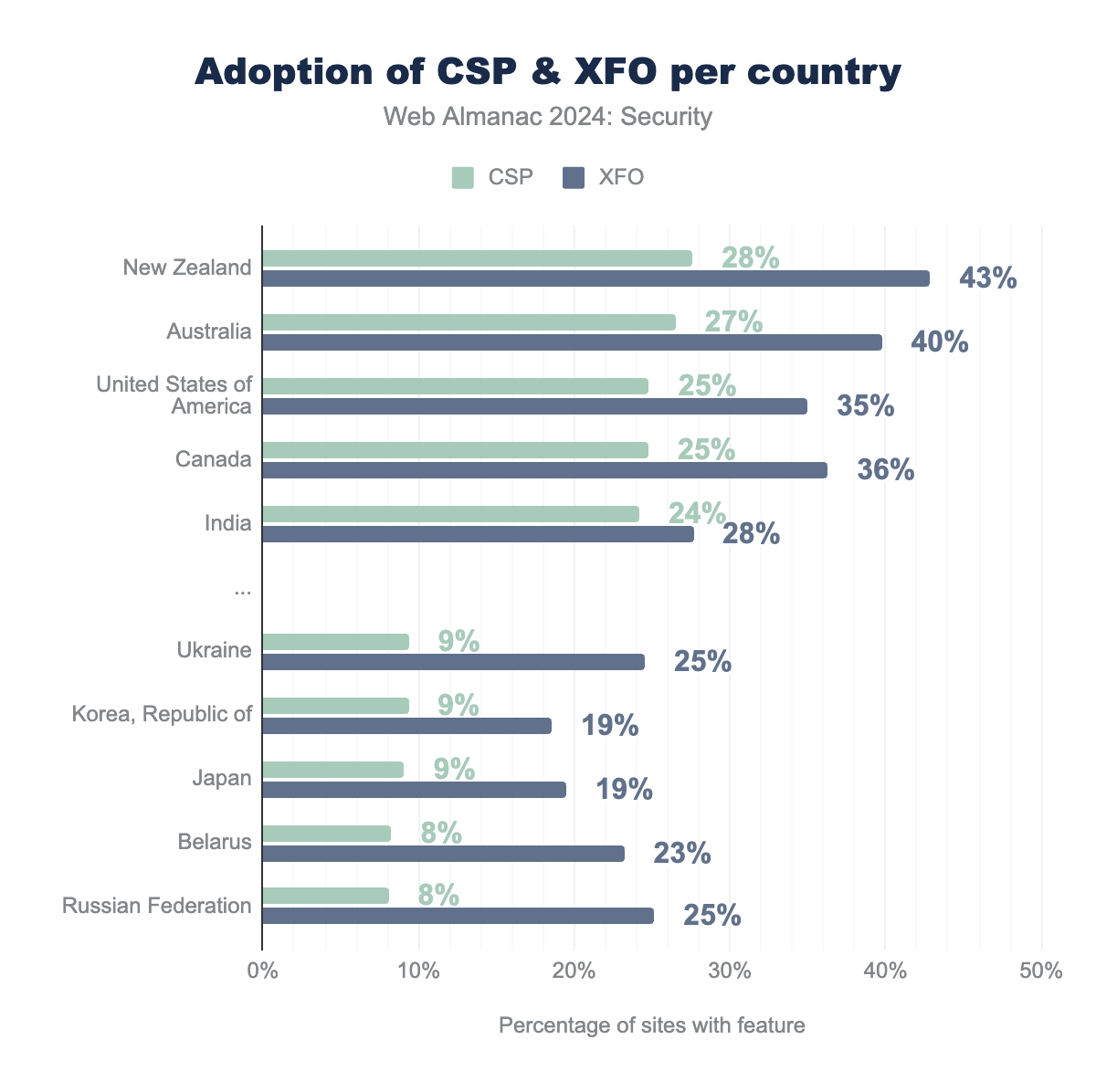

We see that the top 5 countries in terms of CSP adoption have CSP enabled on almost a quarter of their websites. The trailing countries have also seen an increase in the use of CSP, albeit a more moderate one. In general the adoption of both XFO and CSP remains very varied among countries, and the gap between CSP and XFO remains equally large if not larger compared to 2022, reaching up to 15%.

Technology stack

Many sites on the current web are built with large content management systems (CMSs). These may enable security features by default to protect their users.

| Technology | Security features |
|---|---|
| Wix | Strict-Transport-Security (99.9%), X-Content-Type-Options (99.9%) |
| Blogger | X-Content-Type-Options (99.8%), X-XSS-Protection (99.8%) |
| Squarespace | Strict-Transport-Security (98.9%), X-Content-Type-Options (99.1%) |
| Drupal | X-Content-Type-Options (90.3%), X-Frame-Options (87.9%) |
| Google Sites | Content-Security-Policy (99.9%), Cross-Origin-Opener-Policy (99.8%), Cross-Origin-Resource-Policy (99.8%), Referrer-Policy (99.8%), X-Content-Type-Options (99.9%), X-Frame-Options (99.9%), X-XSS-Protection (99.9%) |
| Medium | Content-Security-Policy (99.2%), Strict-Transport-Security (96.4%) |
| Substack | Strict-Transport-Security (100%) |
| Wagtail | Referrer-Policy (55.2%), X-Content-Type-Options (61.7%), X-Frame-Options (72.1%) |
| Plone | Strict-Transport-Security (57.1%), X-Frame-Options (75.2%) |

Many major CMSs that are hosted by the providing company, where users only create content, such as Wix, Squarespace, Google Sites, Medium and Substack, clearly roll out security protections widely, with adoption of HSTS, X-Content-Type-Options or X-XSS-Protection in the upper 99% range. Google Sites continues to be the CMS with the highest number of security features in place.

For CMSs that can easily be self-hosted, such as Plone or Wagtail, it is more difficult to control the rollout of features because the CMS creators have no way to influence the update behavior of users. Websites built on these CMSs may stay online for a long time without any change in their security features.

Website popularity

Large websites often have a high number of visitors and registered users, about whom they might store highly sensitive data. This means they likely attract more attackers and are thus more prone to targeted attacks. Additionally, when an attack succeeds, these websites could be fined or sued, causing financial and/or reputational damage. Therefore, popular websites can be expected to invest more in security to protect their users.

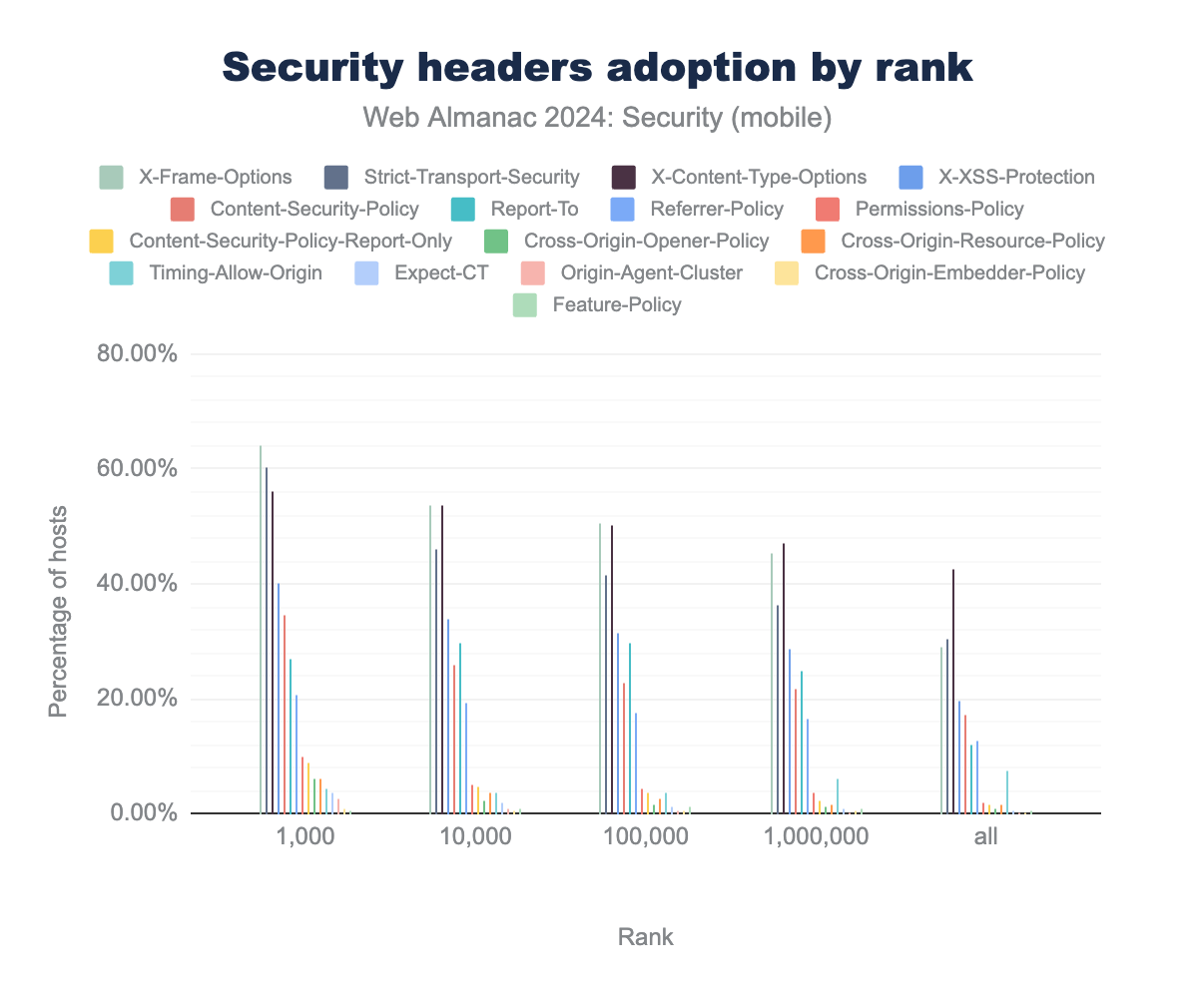

We find that most headers, including the most popular ones (X-Frame-Options, Strict-Transport-Security, X-Content-Type-Options, X-XSS-Protection and Content-Security-Policy), consistently show higher adoption on more popular sites on mobile. For example, 64.3% of the top 1,000 sites on mobile have HSTS enabled, indicating that these sites are more invested in sending traffic only over HTTPS. Less popular sites may still support HTTPS but add a Strict-Transport-Security header less often, which means users may repeatedly reach the site over plain HTTP first.
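To make the HSTS point concrete: a Strict-Transport-Security header only keeps browsers on HTTPS while its max-age is positive, and browsers ignore the header when it arrives over plain HTTP (RFC 6797). The following Python sketch parses a header value and reports whether it enables HSTS. The helper names are hypothetical. The preload flag is tracked even though it is a preload-list convention rather than part of RFC 6797.

```python
from __future__ import annotations


def parse_hsts(value: str) -> dict[str, object]:
    """Parse a Strict-Transport-Security header value into its directives."""
    result: dict[str, object] = {"max-age": None, "includeSubDomains": False,
                                 "preload": False}
    for directive in value.split(";"):
        name, _, arg = directive.strip().partition("=")
        name = name.strip().lower()  # directive names are case-insensitive
        if name == "max-age":
            arg = arg.strip().strip('"')  # the value may be a quoted string
            result["max-age"] = int(arg) if arg.isdigit() else None
        elif name == "includesubdomains":
            result["includeSubDomains"] = True
        elif name == "preload":
            # Not defined in RFC 6797; a convention used by browser preload lists.
            result["preload"] = True
    return result


def hsts_enabled(value: str) -> bool:
    """True if the header keeps HTTPS-only behavior active (max-age > 0)."""
    max_age = parse_hsts(value)["max-age"]
    return isinstance(max_age, int) and max_age > 0


print(hsts_enabled("max-age=31536000; includeSubDomains"))  # True
print(hsts_enabled("max-age=0"))  # False: tells browsers to forget the policy
```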

Website category

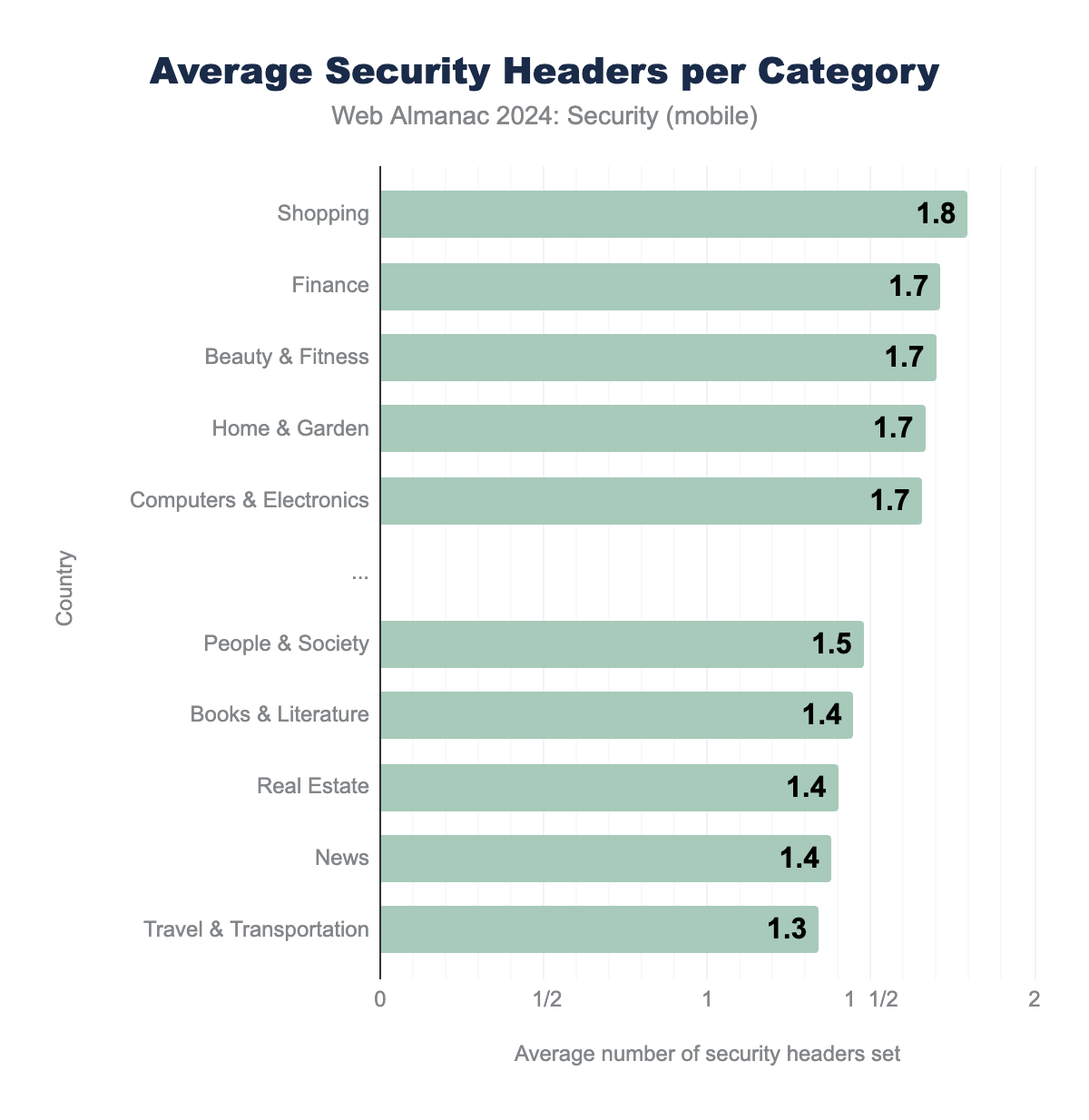

In some industries, developers might keep more up to date with security features they may be able to use to better secure their sites.

We find that there is a subtle difference in the average number of security headers used depending on the categorization of the website. This number does not directly show the overall security of these sites, but might give an insight into which categories of industry are inclined to implement more security features. We see that shopping and finance lead the list, both industries that deal with sensitive information and high amounts of monetary transactions, which may be reasons to invest in security. At the bottom of the list we see news and travel & transportation. Both are categories in which a lot of sites will host content relating to their respective topics, but may not handle much sensitive data compared to sites in the top categories on the list. In general, this trend seems to be weak.

Malpractices on the Web

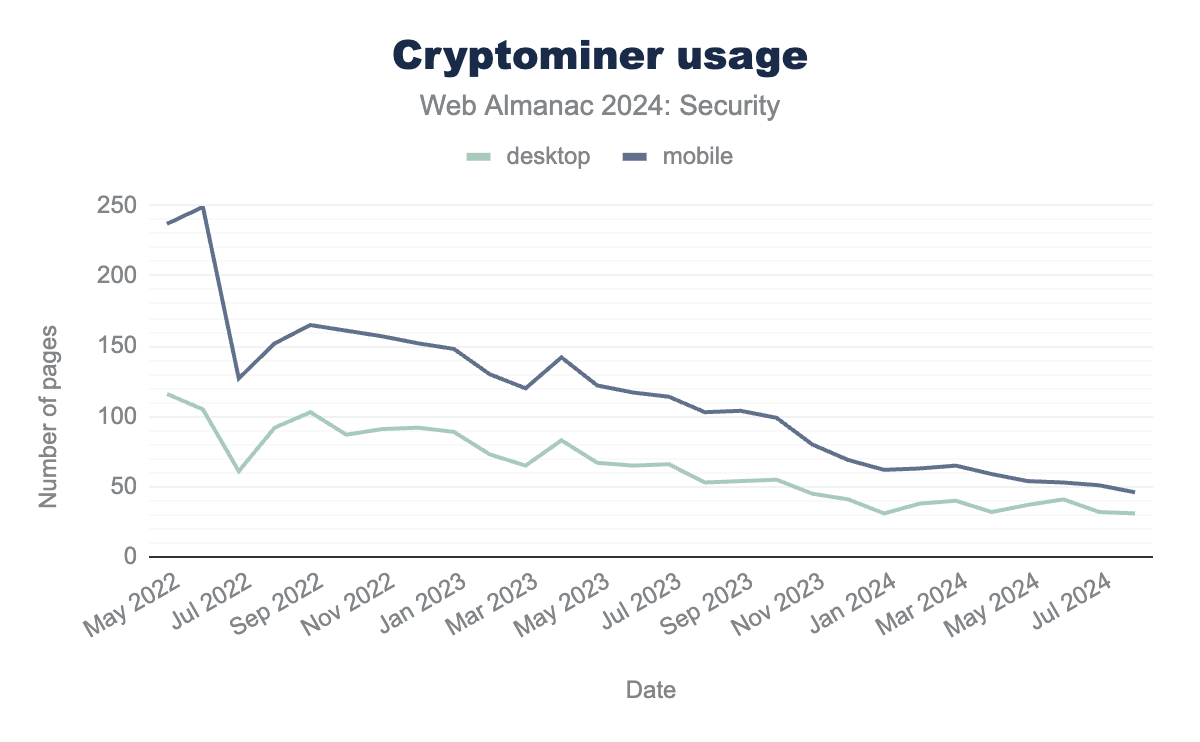

Although cryptocurrencies remain popular, the number of cryptominers on the web has continued to decrease over the past two years, with no notable spikes in usage anymore as was described in the 2022 edition of the Web Almanac.

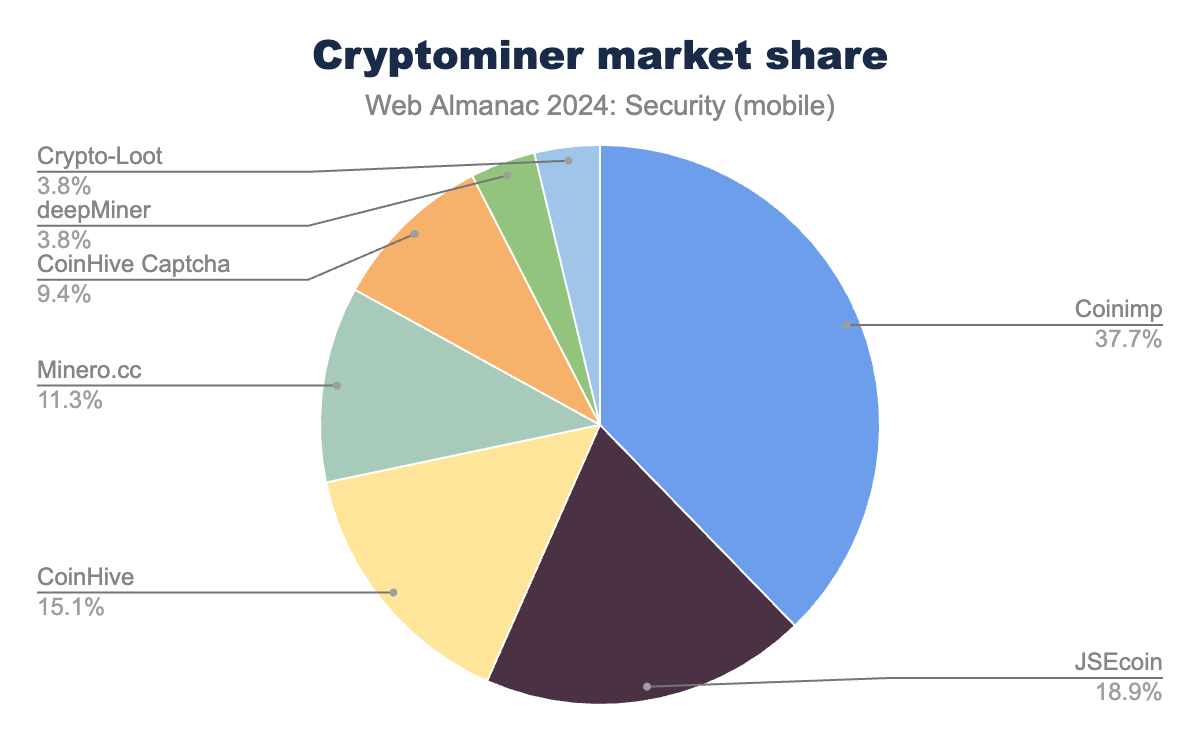

When looking at the cryptominer share, we see that part of the Coinimp share has been overtaken by JSEcoin, while other miners have remained relatively stable, seeing only minor changes. With the low number of cryptominers found on the web, these relative changes are still quite minor.

Note that the results shown here may underrepresent the actual number of websites infected with cryptominers. Since our crawler runs once a month, not all websites that run a cryptominer can be discovered. For example, a website that is only infected for several days might not be detected.

Security misconfigurations and oversights

While the presence of security policies suggests that website administrators are actively working to secure their sites, proper configuration of these policies is crucial. In the following section, we will highlight some observed misconfigurations that could compromise security.

Unsupported policies defined by <meta>

It’s crucial for developers to understand where specific security policies should be defined. For instance, while a secure policy might be defined through a <meta> tag, it could be ignored by the browser if it’s not supported there, potentially leaving the application vulnerable to attacks.

Although the Content Security Policy can be defined using a <meta> tag, its frame-ancestors and sandbox directives are not supported in this context. Despite this, our observations show that 1.70% of pages on desktop and 1.26% on mobile incorrectly used the frame-ancestors directive in the <meta> tag. The equally unsupported sandbox directive is far less common, appearing on less than 0.01% of pages.
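Misplaced policies like these can be caught with a simple static check. The Python sketch below uses the standard library’s html.parser to flag <meta http-equiv> elements that set X-Frame-Options, as well as CSP <meta> policies containing frame-ancestors or sandbox. The class and function names are hypothetical, and the ignore lists only cover the cases discussed in this chapter.

```python
from __future__ import annotations

from html.parser import HTMLParser

# Cases discussed in this chapter; not an exhaustive list.
IGNORED_HTTP_EQUIV = {"x-frame-options"}
META_UNSUPPORTED_CSP = {"frame-ancestors", "sandbox"}


class MetaPolicyChecker(HTMLParser):
    """Collects <meta http-equiv> policies that the browser will ignore."""

    def __init__(self) -> None:
        super().__init__()
        self.problems: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":  # HTMLParser lower-cases tag and attribute names
            return
        attributes = {name: value or "" for name, value in attrs}
        http_equiv = attributes.get("http-equiv", "").strip().lower()
        if http_equiv in IGNORED_HTTP_EQUIV:
            self.problems.append(f"{http_equiv} cannot be set via <meta>")
        elif http_equiv == "content-security-policy":
            for directive in attributes.get("content", "").split(";"):
                tokens = directive.split()
                if tokens and tokens[0].lower() in META_UNSUPPORTED_CSP:
                    self.problems.append(f"CSP {tokens[0].lower()} is ignored in <meta>")


def check_meta_policies(html: str) -> list[str]:
    checker = MetaPolicyChecker()
    checker.feed(html)
    checker.close()
    return checker.problems


page = """<meta http-equiv="X-Frame-Options" content="DENY">
<meta http-equiv="Content-Security-Policy" content="default-src 'self'; frame-ancestors 'none'">"""
print(check_meta_policies(page))
```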

COEP, CORP and COOP confusion

Due to their similar names and purposes, COEP, CORP and COOP are sometimes hard to tell apart. However, assigning unsupported values to these headers can have a detrimental effect on the website’s security.

| Invalid COEP value | Desktop | Mobile |
|---|---|---|
| same-origin | 3.22% | 3.05% |
| cross-origin | 0.30% | 0.23% |
| same-site | 0.06% | 0.04% |

For instance, around 3% of observed COEP headers mistakenly use the unsupported value same-origin. When this occurs, browsers revert to the default behavior of allowing any cross-origin resource to be embedded, while restricting access to features like SharedArrayBuffer and unthrottled use of Performance.now(). This fallback does not inherently reduce security unless the site administrator intended to set same-origin for CORP or COOP, where it is a valid value.

Additionally, only 0.26% of observed COOP headers were set to cross-origin and just 0.02% of CORP headers used the value unsafe-none. Even if these values were mistakenly applied to the wrong headers, they represent the most permissive policies available. Therefore, these misconfigurations are not considered to decrease security.

In addition to cases where valid values intended for one header were mistakenly used for another, we identified several minor instances of syntactical errors across various headers. However, each of these errors accounted for less than 1% of the total observed headers, suggesting that while such mistakes exist, they are relatively infrequent.
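Mix-ups like these are easy to detect before deployment. The following Python sketch, with hypothetical names, checks COEP, CORP and COOP values against the values discussed in this chapter. When a value is valid for one of the other two headers, it hints at the likely mix-up. Any further values introduced by the specifications would need to be added to VALID_VALUES.

```python
from __future__ import annotations

# Values discussed in this chapter, per header (names lower-cased).
VALID_VALUES = {
    "cross-origin-embedder-policy": {"unsafe-none", "require-corp", "credentialless"},
    "cross-origin-resource-policy": {"same-site", "same-origin", "cross-origin"},
    "cross-origin-opener-policy": {"unsafe-none", "same-origin-allow-popups", "same-origin"},
}


def check_cross_origin_headers(headers: dict[str, str]) -> list[str]:
    """Flag COEP/CORP/COOP values that the respective header does not support."""
    findings = []
    for name, value in headers.items():
        allowed = VALID_VALUES.get(name.lower())
        if allowed is None:
            continue  # not one of the three headers
        token = value.split(";", 1)[0].strip()  # ignore parameters like report-to
        if token not in allowed:
            owners = [header for header, values in VALID_VALUES.items() if token in values]
            hint = f" (valid for {', '.join(owners)})" if owners else ""
            findings.append(f"{name}: unsupported value {token!r}{hint}")
    return findings


print(check_cross_origin_headers({"Cross-Origin-Embedder-Policy": "same-origin"}))
```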

Timing-Allow-Origin wildcards

Timing-Allow-Origin is a response header that allows a server to specify a list of origins that are allowed to see values of attributes obtained through features of the Resource Timing API. This means that an origin listed in this header can access detailed timestamps regarding the connection that is being made to the server, such as the time at the start of the TCP connection, start of the request and start of the response.

For cross-origin resources, many of these timings (including the ones listed above) are returned as 0 to prevent cross-origin leaks. Listing an origin in the Timing-Allow-Origin header lifts this restriction for that origin.

Granting other origins access to this information should be done with care, because the site loading the resource can potentially use it to execute timing attacks. In our analysis, 83% of all responses with a Timing-Allow-Origin header contain the wildcard value, thereby allowing any origin to access the fine-grained timing information.

Timing-Allow-Origin headers that are set to the wildcard (*) value.

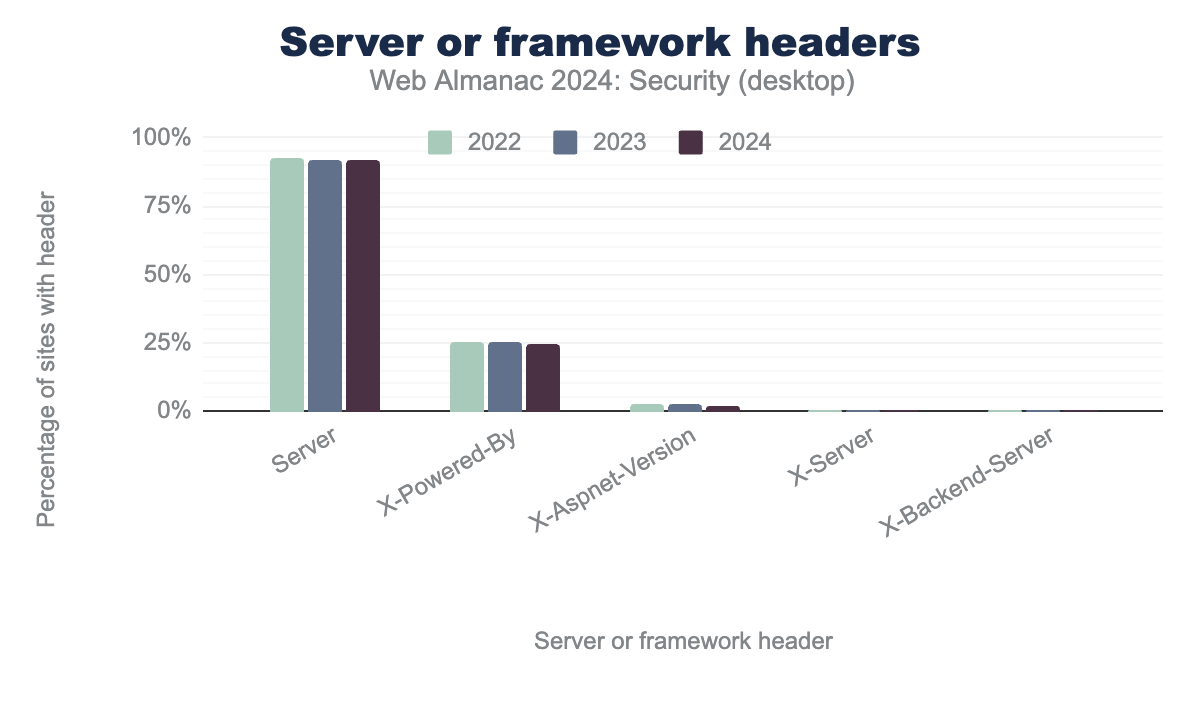
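In simplified form, the check browsers perform matches the requesting origin against the header’s list. The Python sketch below, with a hypothetical function name, shows why the wildcard is so permissive: * passes for every origin, while an explicit list only passes on an exact origin match. It ignores other details of the Resource Timing specification, such as how redirects are handled.

```python
from __future__ import annotations


def timing_allow_origin_passes(tao_values: list[str], requester_origin: str) -> bool:
    """Simplified timing-allow check: wildcard or an exact origin match."""
    for header_value in tao_values:  # several TAO headers may be present
        for item in header_value.split(","):
            item = item.strip()
            if item == "*" or item == requester_origin:
                return True
    return False


print(timing_allow_origin_passes(["*"], "https://attacker.example"))  # True
print(timing_allow_origin_passes(["https://app.example"], "https://attacker.example"))  # False
```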

Missing suppression of server information headers

While security by obscurity is generally considered bad practice, web applications can still benefit from withholding excessive information about the server or framework in use. Although attackers can still fingerprint certain details, minimizing exposure - particularly regarding specific version numbers - can reduce the likelihood of the application being targeted in automated vulnerability scans.

This information is usually reported in headers such as Server, X-Server, X-Backend-Server, X-Powered-By, X-Aspnet-Version.

| Header | 2022 | 2023 | 2024 |
|---|---|---|---|
| Server | 92% | 92% | 92% |
| X-Powered-By | 25% | 25% | 24% |
| X-Aspnet-Version | 3% | 2% | 2% |
| X-Server | 0% | 0% | 0% |
| X-Backend-Server | 0% | 0% | 0% |

Server information headers for the years 2022, 2023 and 2024.

The most commonly exposed header is the Server header, which reveals the software running on the server. It is followed by the X-Powered-By header, which discloses the technologies used by the server.

| Header value | Desktop | Mobile |
|---|---|---|
| PHP/7.4.33 | 9.1% | 9.4% |
| PHP/7.3.33 | 4.6% | 5.4% |
| PHP/5.3.3 | 2.6% | 2.8% |
| PHP/5.6.40 | 2.5% | 2.6% |
| PHP/7.4.29 | 1.7% | 2.2% |
| PHP/7.2.34 | 1.7% | 1.8% |
| PHP/8.0.30 | 1.3% | 1.4% |
| PHP/8.1.28 | 1.1% | 1.1% |
| PHP/8.1.27 | 1.0% | 1.1% |
| PHP/7.1.33 | 1.0% | 1.0% |

X-Powered-By header values with specific framework version.

Examining the most common values for the Server and X-Powered-By headers, we found that the X-Powered-By header in particular specifies versions: the top 10 values all reveal specific PHP versions. For both desktop and mobile, at least 25% of X-Powered-By headers contain this information. This header is likely enabled by default on the observed web servers. While it can be useful for analytics, its benefits are limited, so it should arguably be disabled by default. However, disabling this header alone does not address the security risks of outdated servers; regularly updating the server remains crucial.
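Suppressing these headers is usually a server configuration change (for PHP, the expose_php setting controls X-Powered-By), but it can also be done in application code. The Python sketch below, with hypothetical names, removes the headers listed above from a response and detects version strings such as PHP/7.4.33. As noted above, it complements keeping the server up to date rather than replacing it.

```python
from __future__ import annotations

import re

# Headers that commonly disclose server or framework details (names lower-cased).
DISCLOSURE_HEADERS = {"server", "x-server", "x-backend-server", "x-powered-by",
                      "x-aspnet-version"}
# Dotted version numbers such as 7.4.33 in "PHP/7.4.33".
VERSION_PATTERN = re.compile(r"\d+(?:\.\d+)+")


def reveals_version(value: str) -> bool:
    return VERSION_PATTERN.search(value) is not None


def strip_disclosure_headers(headers: dict[str, str]) -> dict[str, str]:
    """Return a copy of the response headers without disclosure headers."""
    return {name: value for name, value in headers.items()
            if name.lower() not in DISCLOSURE_HEADERS}


response = {"Server": "Apache/2.4.41 (Ubuntu)", "X-Powered-By": "PHP/7.4.33",
            "Content-Type": "text/html"}
print([name for name, value in response.items() if reveals_version(value)])
print(strip_disclosure_headers(response))
```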

Missing suppression of Server-Timing header

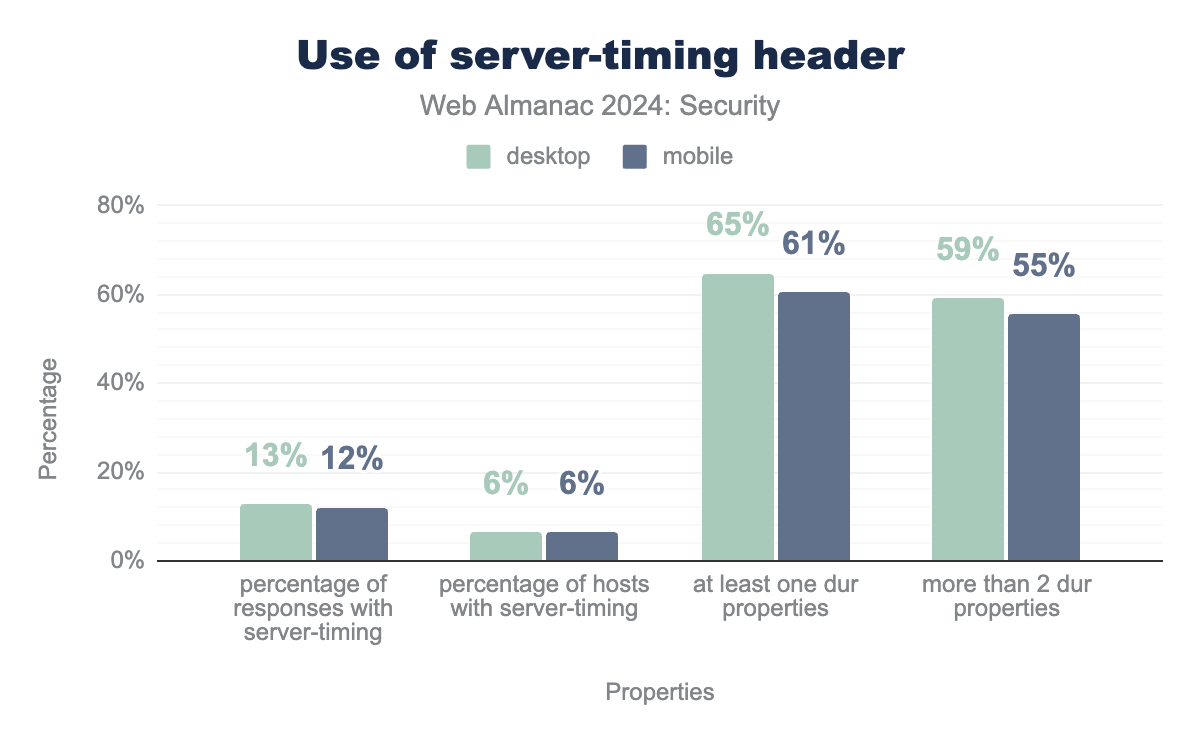

The Server-Timing header is defined in a W3C Editor’s Draft as a header that can be used to communicate server performance metrics. A developer can send metrics containing zero or more properties. One of the specified properties is the dur property, which can be used to communicate millisecond-accurate timings for the duration of a specific action on the server.

We find that Server-Timing is used by 6.4% of hosts. Over 60% of those hosts include at least one dur property in their responses, and over 55% even send more than two. These sites thus expose server-side processing durations directly to clients, which attackers could exploit. Because Server-Timing may contain sensitive information, browsers now restrict access to it to the same origin, unless the developer uses Timing-Allow-Origin as discussed in the previous section. However, timing attacks can still be executed directly against servers without any need to access cross-origin data.

| Metric | Desktop | Mobile |
|---|---|---|
| Responses with Server-Timing header | 13% | 12% |
| Hosts with Server-Timing header | 6% | 6% |
| Server-Timing hosts with at least one dur property | 65% | 61% |
| Server-Timing hosts with more than two dur properties | 59% | 55% |

Usage of the Server-Timing header and its dur properties.
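To illustrate what a client receives, the following Python sketch parses a Server-Timing value into metrics and counts those with a dur property. It roughly mirrors the per-host counts above but is not the query used for this chapter. The names are hypothetical, and the quote handling is simplified: backslash escapes inside quoted desc values are not processed.

```python
from __future__ import annotations


def split_outside_quotes(text: str, sep: str) -> list[str]:
    """Split on sep, ignoring separators inside double-quoted strings."""
    parts, current, in_quotes = [], [], False
    for ch in text:
        if ch == '"':
            in_quotes = not in_quotes
        if ch == sep and not in_quotes:
            parts.append("".join(current))
            current = []
        else:
            current.append(ch)
    parts.append("".join(current))
    return parts


def parse_server_timing(value: str) -> list[dict[str, str]]:
    """Parse a Server-Timing value into one dict per metric."""
    metrics = []
    for entry in split_outside_quotes(value, ","):
        fields = [field.strip() for field in split_outside_quotes(entry, ";")]
        if not fields[0]:
            continue  # skip empty entries
        metric = {"name": fields[0]}
        for param in fields[1:]:
            key, _, val = param.partition("=")
            metric[key.strip().lower()] = val.strip().strip('"')
        metrics.append(metric)
    return metrics


def count_dur(value: str) -> int:
    """Number of metrics that expose a server-side duration (dur)."""
    return sum(1 for metric in parse_server_timing(value) if "dur" in metric)


header = 'db;dur=53.2, app;dur=47.2;desc="render, layout", cache;desc="hit"'
print(count_dur(header))  # 2
```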

.well-known URIs

.well-known URIs provide standardized locations for data or services that relate to the website as a whole. A well-known URI is a URI whose path component begins with the characters /.well-known/.
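Well-known URIs are specified in RFC 8615. As a small sketch (the helper names are our own), a well-known URI is built from a site's origin alone, and a URL qualifies only when its path starts with /.well-known/:

```python
from urllib.parse import urlsplit, urlunsplit

WELL_KNOWN_PREFIX = "/.well-known/"


def well_known_url(site: str, name: str) -> str:
    """Build the well-known URI for `name` on the origin of `site`,
    discarding any path, query or fragment of the input URL."""
    parts = urlsplit(site)
    return urlunsplit((parts.scheme, parts.netloc, WELL_KNOWN_PREFIX + name, "", ""))


def is_well_known(url: str) -> bool:
    """True if the URL's path component begins with /.well-known/."""
    return urlsplit(url).path.startswith(WELL_KNOWN_PREFIX)


print(well_known_url("https://example.com/blog/post?id=1", "security.txt"))
# https://example.com/.well-known/security.txt
```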

security.txt

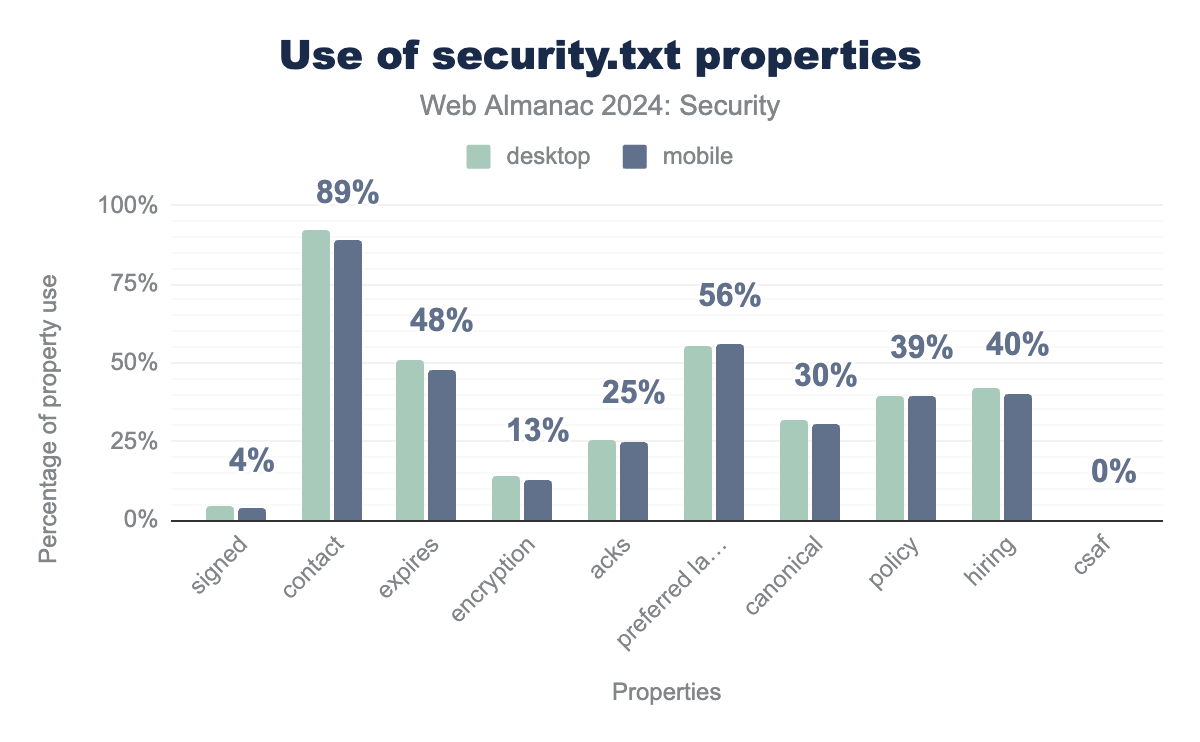

security.txt is a file format that websites can use to communicate information about vulnerability reporting in a standard way. Website developers can provide contact details, a PGP key, a policy, and other information in this file. White hat hackers and penetration testers can then use this information to report potential vulnerabilities they find during their security analyses. Our analysis shows that 1% of websites currently use a security.txt file, a sign that these sites are actively working on improving their security.
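A minimal security.txt can also be generated programmatically. The sketch below follows the field syntax of RFC 9116, in which Contact and Expires are the required fields; the contact address and policy URL are placeholders, and build_security_txt is a hypothetical helper rather than part of any library.

```python
from datetime import datetime, timezone
from typing import List, Optional


def build_security_txt(contacts: List[str], expires: datetime,
                       policy: Optional[str] = None,
                       preferred_languages: Optional[List[str]] = None) -> str:
    """Render a minimal security.txt using RFC 9116 field names.

    Contact (at least one) and Expires (exactly one) are the required
    fields; Policy and Preferred-Languages are optional extras.
    `expires` is expected to be a timezone-aware datetime.
    """
    if not contacts:
        raise ValueError("at least one Contact field is required")
    lines = [f"Contact: {uri}" for uri in contacts]
    # Expires is an RFC 3339 timestamp; we normalise it to UTC with a "Z" suffix.
    stamp = expires.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    lines.append(f"Expires: {stamp}")
    if policy:
        lines.append(f"Policy: {policy}")
    if preferred_languages:
        lines.append("Preferred-Languages: " + ", ".join(preferred_languages))
    return "\n".join(lines) + "\n"


# Placeholder values for illustration only.
print(build_security_txt(
    contacts=["mailto:security@example.com"],
    expires=datetime(2025, 12, 31, 23, 59, tzinfo=timezone.utc),
    policy="https://example.com/security-policy",
    preferred_languages=["en", "nl"],
))
```

The resulting text would be served over HTTPS at /.well-known/security.txt.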

Use of security.txt properties (desktop / mobile): signed 4% / 4%, contact 92% / 89%, expires 51% / 48%, encryption 14% / 13%, acks 26% / 25%, preferred language 55% / 56%, canonical 32% / 30%, policy 39% / 39%, and csaf 0% / 0%.

Most security.txt files include contact information (88.8%) and a preferred language (56.0%). This year, 47.9% of security.txt files define an expiry, a large jump from 2.3% in 2022. This can largely be explained by a change in methodology: this year the analysis only includes text files, rather than all responses with status code 200, which significantly lowers the false positive rate. It does mean that fewer than half of the sites that use security.txt follow the standard, which (among other requirements) makes the expires property mandatory. Interestingly, only 39% of security.txt files define a policy, the field where developers can indicate what steps a white hat hacker who finds a vulnerability should take to report it.
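The expires finding can be reproduced with a small validator. This sketch rests on our own simplifying assumptions (one field per line, PGP-signed files not unwrapped, hypothetical function names) and checks only the two fields RFC 9116 requires: at least one Contact and exactly one Expires that lies in the future.

```python
from datetime import datetime, timezone


def parse_fields(text: str) -> dict[str, list[str]]:
    """Collect `Field: value` lines; field names are case-insensitive.
    Comments (#) and blank lines are skipped."""
    fields: dict[str, list[str]] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue
        name, _, value = line.partition(":")  # split at the first colon only
        fields.setdefault(name.strip().lower(), []).append(value.strip())
    return fields


def problems(text: str, now: datetime) -> list[str]:
    """List violations of the required Contact and Expires fields."""
    fields = parse_fields(text)
    issues = []
    if not fields.get("contact"):
        issues.append("missing Contact")
    expires = fields.get("expires", [])
    if len(expires) != 1:
        issues.append("Expires must appear exactly once")
    else:
        try:
            # fromisoformat() accepts a trailing "Z" only from Python 3.11,
            # so rewrite it as an explicit UTC offset first.
            when = datetime.fromisoformat(expires[0].replace("Z", "+00:00"))
        except ValueError:
            issues.append("Expires is not a valid timestamp")
        else:
            if when.tzinfo is None:
                issues.append("Expires has no time zone offset")
            elif when <= now:
                issues.append("Expires is in the past")
    return issues


sample = "Contact: mailto:security@example.com\nExpires: 2023-01-01T00:00:00Z\n"
print(problems(sample, now=datetime(2024, 6, 1, tzinfo=timezone.utc)))
# ['Expires is in the past']
```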

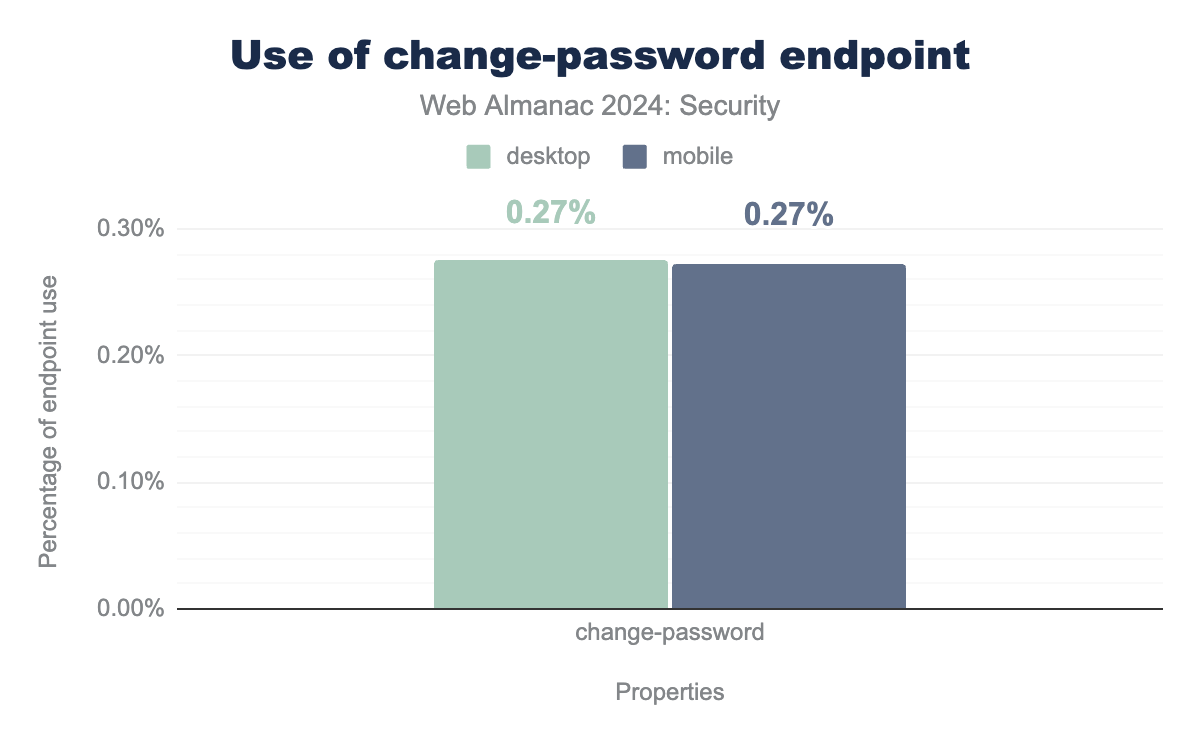

change-password

The change-password well-known URI is defined in a W3C specification that is still an editor’s draft, the same state as in 2022. This well-known URI was proposed as a way for users and software to easily find the page for changing a password, which also means external resources can easily link to that page.
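Supporting this URI mostly amounts to a redirect. The sketch below uses Python's standard http.server module; the target path /account/security/password is a hypothetical example, and the temporary 302 redirect is our own choice so that the real page can move later.

```python
from http import HTTPStatus
from http.server import BaseHTTPRequestHandler

# Hypothetical location of this site's real password-change form.
CHANGE_PASSWORD_PAGE = "/account/security/password"


def route(path: str) -> tuple[int, dict[str, str]]:
    """Decide the status and headers for a request path (no body, for brevity)."""
    if path == "/.well-known/change-password":
        # Temporary redirect, so the target page can be moved later.
        return HTTPStatus.FOUND, {"Location": CHANGE_PASSWORD_PAGE}
    return HTTPStatus.NOT_FOUND, {}


class Handler(BaseHTTPRequestHandler):
    """Minimal handler that applies route() to every GET request."""

    def do_GET(self) -> None:
        status, headers = route(self.path)
        self.send_response(status)
        for name, value in headers.items():
            self.send_header(name, value)
        self.end_headers()
```

To try it locally, run HTTPServer(("127.0.0.1", 8000), Handler).serve_forever() (with HTTPServer imported from http.server) and request /.well-known/change-password.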

Adoption remains very low. At 0.27% on both mobile and desktop, it decreased slightly on desktop from 0.28% in 2022. Given the slow standardization process, it is not surprising that adoption has barely changed. As before, we note that websites without authentication mechanisms have no use for this URL, so there is no reason for them to implement it.

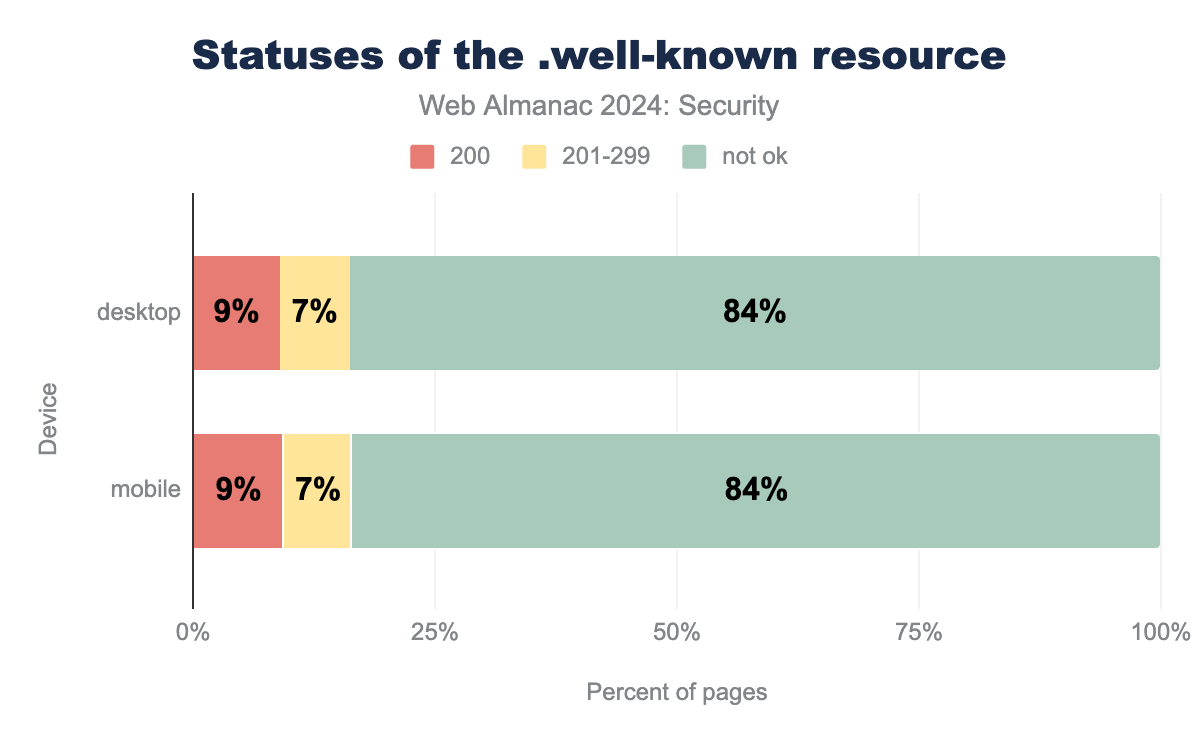

Detecting status code reliability

A specification that, as in 2022, is still an editor’s draft defines a particular well-known URI for determining the reliability of a website’s HTTP response status codes. The idea behind this well-known URI is that it should never exist on any website, so navigating to it should never result in a response with an ok status. If the request, possibly after redirects, ends in an ok status, the website’s status codes are not reliable. This can happen, for example, when the site redirects to a custom “404 Not Found” error page that is itself served with an ok status.
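The check itself is simple: request the probe URL, follow any redirects, and see whether the final status is ok (200 to 299, as defined by the Fetch standard). In the sketch below, the probe path is the one we understand the draft to define and should be verified against the current draft; the helper names are hypothetical.

```python
import urllib.error
import urllib.request

# Probe path as we understand the editor's draft to define it (verify before use).
PROBE_PATH = "/.well-known/resource-that-should-not-exist-whose-status-code-should-not-be-200"


def is_ok_status(status: int) -> bool:
    """An ok status is any status in the range 200-299."""
    return 200 <= status <= 299


def final_status(origin: str, timeout: float = 10.0) -> int:
    """Request the probe URL, following redirects, and return the final status."""
    try:
        with urllib.request.urlopen(origin.rstrip("/") + PROBE_PATH, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # urllib raises 4xx/5xx responses as HTTPError


def status_codes_reliable(status: int) -> bool:
    """Status codes are considered reliable if the probe does not end in an ok status."""
    return not is_ok_status(status)
```

final_status performs a live request, so a call such as status_codes_reliable(final_status("https://example.com")) needs network access.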

Status codes returned by the .well-known endpoint to assess status code reliability.

We find a distribution similar to 2022: 83.6% of pages respond with a not-ok status, which is the expected outcome. As with change-password, one reason these figures may have changed little is that the specification remains an editor’s draft and standardization is slow.

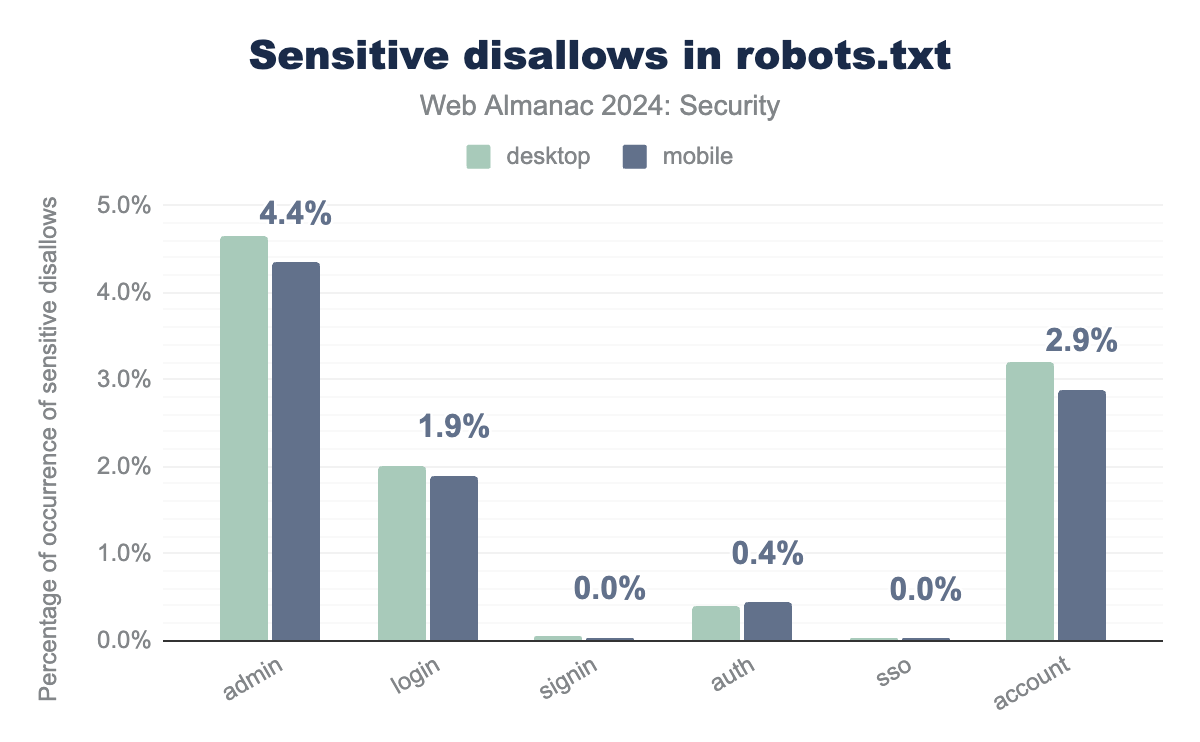

Sensitive endpoints in robots.txt

Finally, we check whether robots.txt files include potentially sensitive endpoints. Since robots.txt is publicly readable, attackers can use its exclusions to select websites or endpoints to target.

Sensitive endpoints in robots.txt disallow rules (desktop / mobile): admin 4.7% / 4.4%, login 2.0% / 1.9%, signin 0.0% / 0.0%, auth 0.4% / 0.4%, and account 3.2% / 2.9% of hosts.

We see that around 4.3% of websites include at least one admin entry in their robots.txt file.

Such an entry can point attackers to an admin-only section of the website that would otherwise stay hidden, since finding it would require guessing specific subpages under that URL. login, signin, auth, sso and account point to the existence of a mechanism where users can log in with an account they created or received. Each of these endpoints is included in the robots.txt of a number of sites (some of which may overlap), with account being the most common at 2.9% of websites.
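A keyword scan over Disallow rules is enough to reproduce this kind of measurement. This sketch uses the keywords named in this section and plain substring matching, which is our own simplifying assumption; it will, for instance, also match /author/ for auth. The function name is hypothetical.

```python
SENSITIVE_KEYWORDS = ("admin", "login", "signin", "auth", "sso", "account")


def sensitive_disallows(robots_txt: str) -> dict[str, list[str]]:
    """Map each keyword to the Disallow paths containing it (case-insensitive)."""
    hits: dict[str, list[str]] = {k: [] for k in SENSITIVE_KEYWORDS}
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        field, sep, value = line.partition(":")
        if not sep or field.strip().lower() != "disallow":
            continue
        path = value.strip()
        for keyword in SENSITIVE_KEYWORDS:
            if keyword in path.lower():
                hits[keyword].append(path)
    # Only report keywords that matched at least one rule.
    return {k: v for k, v in hits.items() if v}


robots = """User-agent: *
Disallow: /wp-admin/
Disallow: /account/login  # members only
Allow: /public/
"""
print(sensitive_disallows(robots))
# {'admin': ['/wp-admin/'], 'login': ['/account/login'], 'account': ['/account/login']}
```

Disallow rules are advisory and publicly readable, so they hide nothing; sensitive areas need proper access control rather than a robots.txt exclusion.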

Indirect resellers in ads.txt

The ads.txt file is a standardized format that allows websites to specify which companies are authorized to sell or resell their digital ad space within the complex landscape of programmatic advertising. Companies can be listed as either direct sellers or indirect resellers. Indirect resellers, however, can leave publishers (the sites hosting the ads.txt file) more vulnerable to ad fraud because they offer less control over who purchases ad space. This weakness was exploited in 2019 by the so-called 404bot scam, resulting in millions of dollars in lost revenue.

By refraining from listing indirect sellers, website owners help prevent unauthorized reselling and reduce ad fraud, thereby enhancing the security and integrity of their ad transactions. Among publishers that host an ads.txt file, 77% for desktop and 42.4% for mobile avoid resellers entirely, curbing potential fraud.
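Each ads.txt record lists an advertising system domain, the publisher's account ID, and the relationship (DIRECT or RESELLER), optionally followed by a certification authority ID. The sketch below, with hypothetical helper names and placeholder accounts, checks whether a file lists any resellers; it skips comments and variable lines such as contact=.

```python
def ads_txt_relationships(text: str) -> list[tuple[str, str, str]]:
    """Return (ad system domain, account ID, relationship) for each record.
    Blank lines, comments and variable lines such as 'contact=...' are skipped."""
    records = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        fields = [f.strip() for f in line.split(",")]
        if len(fields) < 3:
            continue  # not a data record
        records.append((fields[0].lower(), fields[1], fields[2].upper()))
    return records


def lists_resellers(text: str) -> bool:
    """True if any record declares an indirect (RESELLER) relationship."""
    return any(rel == "RESELLER" for _, _, rel in ads_txt_relationships(text))


ads = """# ads.txt with placeholder accounts
adsystem.example, pub-0001, DIRECT, abc123
ssp.example, 12345, RESELLER
contact=adops@example.com
"""
print(lists_resellers(ads))
# True
```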

Conclusion

Overall, this year’s analysis highlights promising trends in web security. HTTPS adoption is nearing 100%, with Let’s Encrypt leading the charge by issuing over half of all certificates, making secure connections more accessible. Although the overall adoption of security policies remains limited, it’s encouraging to see steady progress with key security headers. Secure-by-default measures, like the SameSite=Lax attribute for cookies, are driving website administrators to at least consider important security practices.

However, attention must also be paid to weak configurations and outright misconfigurations that can undermine these protections. Issues like invalid directives or poorly defined policies can prevent browsers from enforcing security effectively. For instance, 82.5% of all Timing-Allow-Origin headers allow any origin to access detailed timing information, which could be abused in timing attacks. Similarly, only 1% of websites enable security issue reporting via security.txt, and many still expose their PHP version, an unnecessary risk that can reveal potential vulnerabilities. On the bright side, most of these issues are low-hanging fruit: addressing them typically requires minimal changes to website implementations.

As the number of security policies grows, it’s essential for policymakers to focus on reducing complexity. Reducing implementation friction will make adoption easier and minimize common mistakes. For example, the introduction of cross-origin headers designed to prevent cross-site leaks and microarchitectural attacks has already caused confusion, with directives from one policy mistakenly applied to another.

Although new attacks will undoubtedly emerge in the future, demanding new protections, the openness of the security community plays a crucial role in developing sound solutions. As we’ve seen, the adoption of new measures may take time, but progress is being made. Each step forward brings us closer to a more resilient and secure Web for everyone.